Old Papers Never Die – They Only Fade Away……

Interacting with the Computer: a Framework

John Long Comment 1

The title remains an appropriate one. However, given its subsequent references to: ‘domains’; ‘applications’; ‘application domains’; ‘tasks’ etc, it must be assumed that the interaction is: ‘to do something’; ‘to perform tasks’; ‘to achieve aims or goals’; or some such. Further modeling of such domains/applications, beyond that of text processing, would be required for any re-publication of the paper and in the light of advances in computing technology – see earlier. The issue is pervasive – see also Comments 6, 35, 37, 40 and 41.

Comment 2

‘A Framework’ is also considered to be appropriate. Better than ‘a conception’, which promises greater completeness, coherence and fitness-for-purpose (unless, of course, these criteria are explicitly taken on-board). However, the Framework must explicitly declare and own its purpose, as later set out in the paper and referenced in Figure 1. See also Comments 15, 19, 27, and 42.

J. Morton, P. Barnard, N. Hammond* and J.B. Long

M.R.C. Applied Psychology Unit, Cambridge, England *also IBM Scientific Centre, Peterlee, England

Recent technological advances in the development of information processing systems will inevitably lead to a change in the nature of human-computer interaction.

Comment 3‘Recent technological advances’ in 1979 centred around the personal, as opposed to the main-frame, computer. To-day there are a plethora of advances in computing technology – see Commentary Introduction earlier for a list of examples. A re-publication of the paper would require a major up-date to address these new applications, as well as their associated users. Any up-date would need to include additional models and tools for such address, as well as an assessment of the continued suitability of the models and tools, proposed in the ’79 paper.

Direct interactions with systems will no longer be the sole province of the sophisticated data processing professional or the skilled terminal user. In consequence, assumptions underlying human-system communication will have to be re-evaluated for a broad range of applications and users. The central issue of the present paper concerns the way in which this re-evaluation should occur.

First of all, then, we will present a characterisation of the effective model which the computer industry has of the interactive process.

Comment 4We contrasted our ’79 models/theories with a single computer industry’s model. To-day, there are many types of HCI model/theory. A recent book on the subject listed 9 types of ‘Modern Theories’ and 6 types of ‘Contemporary Theories’ (Rogers, 2012). The ‘industry model’ has, of course, itself evolved and now takes many forms (Harper et al., 2008). Any re-publication of the ’79 paper would have to specify both with which HCI models/theories it wished to be contrasted and with what current industry models.

The shortcoming of the model is that it fails to take proper account of the nature of the user and as such can not integrate, interpret, anticipate or palliate the kinds of errors which the new user will resent making. For remember that the new user will avoid error by adopting other means of gaining his ends, which can lead either to non-use or to monstrously inefficient use. We will document some user problems in support of this contention and indicate the kinds of alternative models which we are developing in an attempt to meet this need.

The Industry’s Model (IM)

The problem we see with the industry’s model of the human-computer interaction is that it is computer-centric. In some cases, as we shall see, it will have designer-centric aspects as well.

Comment 5In 1979, all design was carried out by software engineers. Since then, many other professionals have become involved – initially psychologists, then HCI-trained practitioners, graphic designers, ethnomethodologists, technocratic artists etc. However, most design (as opposed to user requirements gathering or evaluation) is still performed by software engineers. Any re-publication of this paper would have to identify the different sorts of design activity, to assess their relative contribution to computer- and designer-centricity respectively and the form of support appropriate to each, which it might offer – see also Comments 14, 15, 21 (iv) and (v), 27 and 41 (iv).

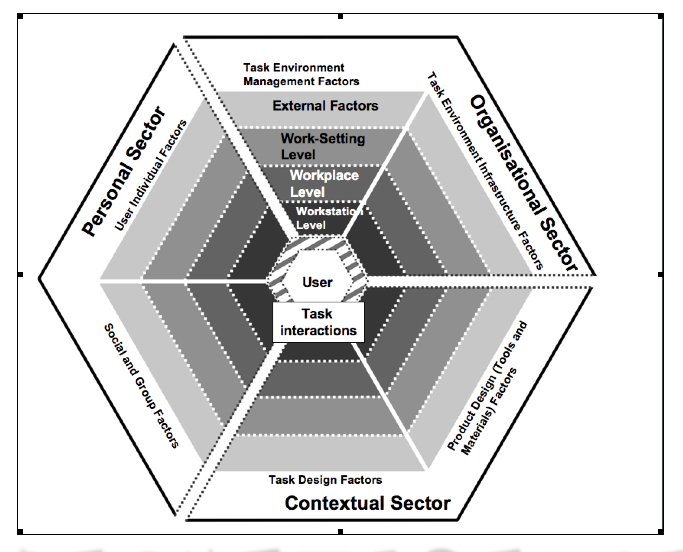

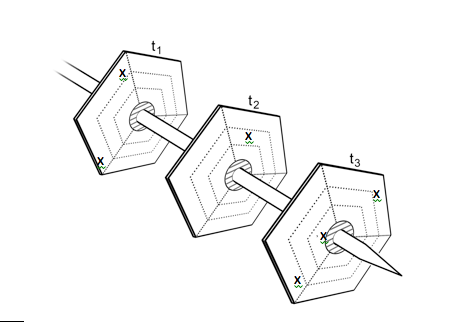

To start off with, consider a system designed to operate in a particular domain of activity.

Comment 6Any re-published paper would have to develop more further the concept of ‘domain’ (see Comment 1). The development would need to address: 1. The computer’s version of the domain and its display thereof. There is no necessary one-to-one relationship (consider the pilot alarm systems in the domain of air traffic management). Software engineer designers might specify the former and HCI designers the latter; and 2. To what extent the domain is an ‘image of the world and its resources’. See Comments 1, 35, 37, 40 and 41.

In the archetypal I.M. the database is neutralised in much the same kind of way that a statistician will ritually neutralise the data on which he operates, stripping his manipulation of any meaning other than the purely numerical one his equations impose upon the underlying reality. This arises because the only version of the domain which exists at the interface is that one which is expressed in the computer. This version, perhaps created by an expert systems analyst on the best logical grounds and the most efficient, perhaps, for the computations which have to be performed, becomes the one to which the user must conform. This singular and logical version of the domain will, at best, be neutral from the point of view of the user. More often it will be an alien creature, isolating the user and mocking him with its image of the world and its resources to which he must haplessly conform.

Florid language? But listen to the user talking.

Comment 7The ’79 user data are now quite out of date, both in terms of their content, means of acquisition and associated technology, compared with more recent data. However, current user-experience continues to have much in common with that of the past. Up-dated data are required to confirm this continuity.

“We come into contact with computer people, a great many of whom talk a very alien language, and you have constant difficulty in trying to sort out this kind of mid-Atlantic jargon.”

“We were slung towards what in my opinion is a pretty inadequate manual and told to get on with it”

“We found we were getting messages back through the terminal saying there’s not sufficient space on the machine. Now how in Hell’s name are we supposed to know whether there’s sufficient space on the machine?” .

In addition the industry’s model does not really include the learning process; nor does it always take adequate note of individual’s abilities and experience:

“Documentation alone is not sufficient; there needs to be the personal touch as well . ”

“Social work being much more of an art than a science then we are talking about people who are basically not very numerate beginning to use a machine which seems to be essentially numerate.”

Even if training is included in the final package it is never in the design model. Is there anyone here, who, faced with a design choice asked the questions “Which option will be the easiest to describe to the naive user? Which option will be easiest to understand? Which option will be easiest to learn and remember?”

Comment 8Naive users, of course, continue to exist to-day. However, there are many more types of users than naive and professional of interest to current HCI researchers. Differences exist between users of associated technologies (robotic versus ambient); from different demographics (old versus young); at different stages of development (nursery versus teenage children); from different cultures (developed versus less developed) etc. These different types of user would need some consideration in any re-publication.

Let us note again the discrepancy between the I.M. view of error and ours . For us errors are an indication of something wrong with the system or an indication of the way in which training should proceed. In the I.M. errors are an integral part of the interaction. For the onlooker the most impressive part of a D.P. interaction is not that it is error free but that the error recovery procedures are so well practised that it is difficult to recognise them for what they are .

Comment 9As well as this important distinction, concerning errors, they need to be related to ‘domains’, applications’ and ‘effectiveness’ or ‘performance’ and not just user (or indeed computer) behaviour. See Comment 6 earlier and Comments 35, 36, 37 and 38 later.

Errorless performance may not be acceptable (consider air traffic expedition). Errorful behaviour may be acceptable (consider some e-mail errors). A re-published ’79 paper would have to take an analytic/technical(that is Framework grounded) view of error and not just a simple adoption of users’ (lay-language) expression. This problem is ubiquitous in HCI, both past and present.

We would not want it thought that we felt the industry was totally arbitrary . There are a number of natural guiding principles which most designers would adhere to. See also Comment 16.

Comment 10We contrast here two types of principle, which designers might adhere to: 1. IM principles, as ‘intuitive, non-systematic, not totally arbitrary’; and our proposed principles, as ‘systematic’. In the light of this contrast, we need to set out clearly: 1. What and how are our principles ‘systematic’? and 2. How does this systematicity guarantee/support better design?

Note that in Figure 1 later, there is an ‘output to system designers’. Is this output expressed in (systematic) principles? If not, what would be its form of expression? Any form of expression would raise the same issues raised earlier for ‘sysematic principles’.

We do not anticipate meeting a system in which the command DESTROY has the effect of preserving the information currently displayed while PRESERVE had the effect of erasing the operating system. However , the principles employed are intuitive and non-systematic. Above all they make the error of embodying the belief that just as there can only be one appropriate representation of the domain, so there is only one kind of human mind.

A nice example of a partial use of language constraints is provided by a statistical package called GENSTAT. This package permits users to have permanent userfiles and also temporary storage in a workfile. The set of commands associated with these facilities are :

PUT – copies from core to workfile

GET – copies from workfile to core

FILE – defines a userfile

SAVE – copies from workfile to userfile

FETCH – copies from userfile to workfile

The commands certainly have the merit that they have the expected directionality with respect to the user. However to what extent do, for example, FETCH and GET relate naturally to the functions they have been assigned? No doubt the designers have strong intuitions about these assignments. So do users and they do not concur. We asked 40 people here at the A. P.U. which way round they thought the assignments should go: nineteen of these agreed with the system designers, 21 went the 0ther way . The confidence levels of rationalisations were very convincing on both sides!

The problem then, is not just that systems tend to be designer-centric but that the designers have the wrong model either of the learning process or of the non-D.P. users’ attitude toward error. A part-time user is going to be susceptible to memory failure and, in particular, to interference from outside the computer system. du Boulay and O’ Shea [I] note that naive users can use THEN in the sense of ‘next’ rather then as ‘implies’. This is inconceivable to the IM for THEN is almost certainly a full homonym for most D.P. and the appropriate meaning the appropriate meaning thoroughly context-determined .

Comment 11The GENSTAT example was so good for our purposes, that it has taken considerable reflection to wonder if there really is a natural language solution, which would avoid memory failure and/or interference. It is certainly not obvious.

The alternative would be to add information to a menu or somesuch (rather like in our example). But this is just the sort of solution IM software engineers might propose. Where would that leave any ‘systematic’ principles’? – see Comment 10 earlier.

An Alternative to the Industry Model

The central assumption for the system of the future will be ‘systems match people’ rather than ‘people match systems’. Not entirely so, as we shall elaborate, for in principle, the capacity and perspectives of the user with respect to a task domain could well change through interaction with a computer system.

Comment 12In general, the alternative aims to those of the IM promise well. The mismatch, however, seems to be expressed at a more abstract level than that of the ‘task domain’ – the ‘alien creature, isolating the user and mocking him with its image of the world and its resources to which he must haplessly conform’ – see earlier in the paper. Suppose the mismatch is at this specific level, where does this leave, for example, the natural language mismatch? Of course, we could characterise domain-specific mismatches, for example, the contrasting references to ambient environment in air- and sea-traffic management, although for professional, not for naive users. Such mismatches would require a form of domain model absent from the original paper. However, the same issue arises in the domains of letter writing and planning by means of ‘to do’ lists. Either way, the application domain mismatch needs to be addressed, along with that of natural language.

But the capacity to change is more limited than the variety available in the system .

Comment 13The contrast ‘personal versus mainframe computer’ and the parallel contrast ‘occasional/naive versus professional user’ served us very well in ’79. But the explosion of new computing technology (see Comment 3 earlier) and associated users requires a more refined set of contrasts. There are, of course, still occasional naive users; but these are mainly in the older population and constitute a modest percentage of current users. However, with demographic changes and a longer-living older population, it would not be an uninteresting sub-set of all present users. A re-publication, which wanted to restrict its range in the manner of the ’79 paper, might address ‘older users’ and domestic/personal computing technology. An interesting associated domain might be ‘computer-supported co-operative social and health care’. We could be our own ‘subjects, targets, researchers, and designers’, as per Figure 1 later.

Our task, then, is to characterise the mismatch between man and computer in such a way that permits us to direct the designer’s effort.

Comment 14Directing the designer’s efforts are strong words and need to be linked to the notion and guarantee of principles – see Comment 10 and Figure 1 ‘output to designers’. Such direction of design needs to be aligned with scientific/applied scientific or engineering aims (see Comments 15 and 18).

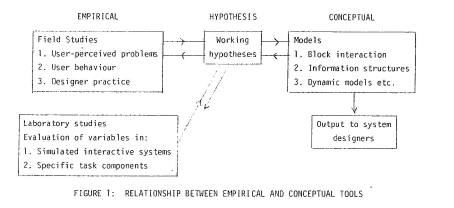

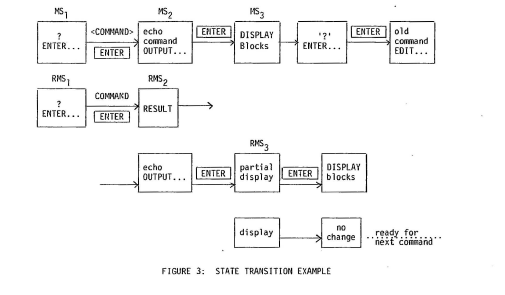

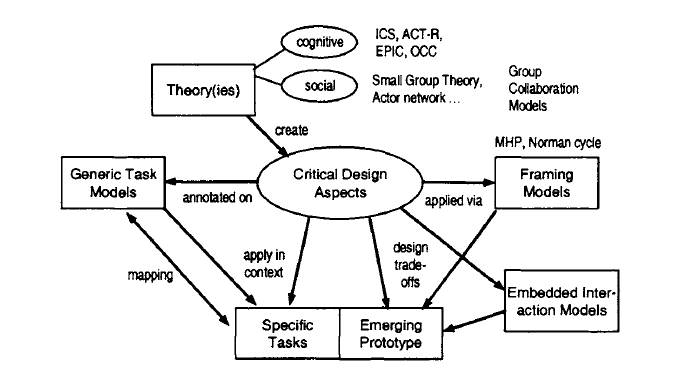

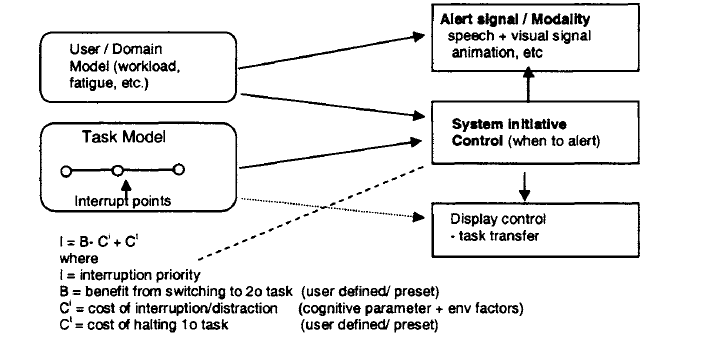

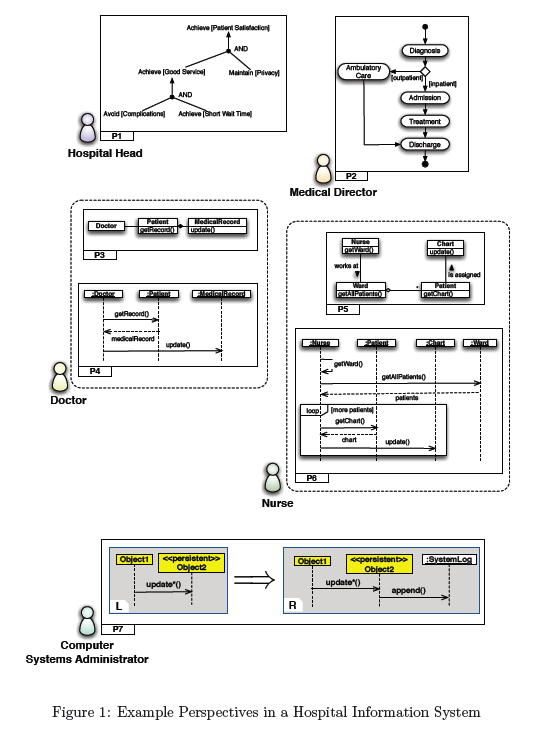

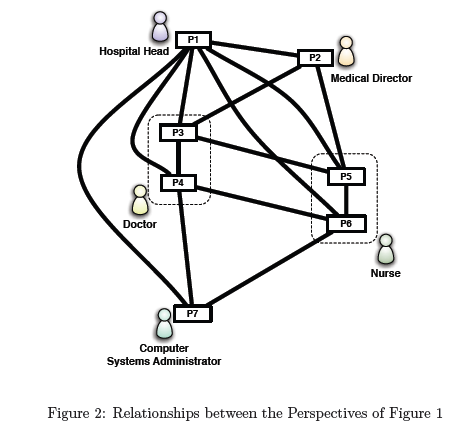

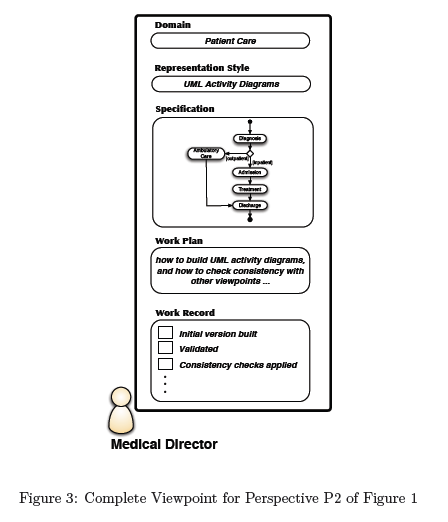

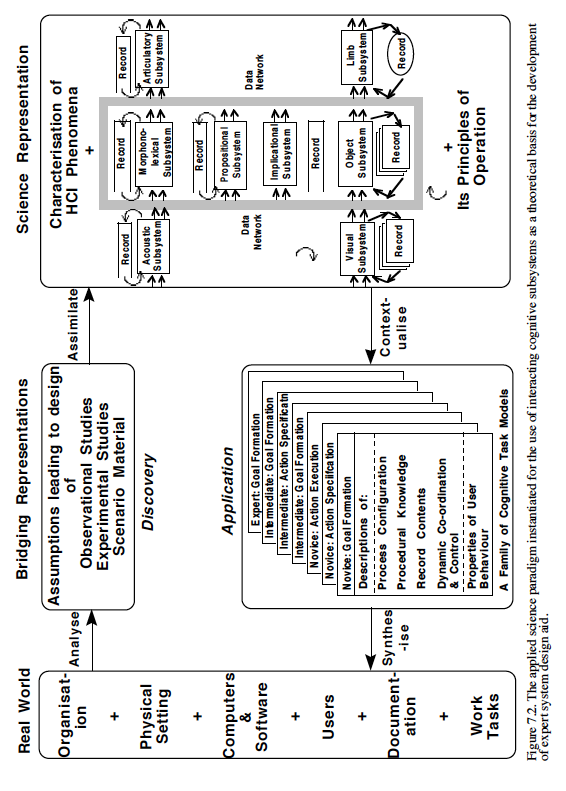

In doing this we are developing two kinds of tool, conceptual and empirical. These interrelate within an overall scheme for researching human-computer interaction as shown in Figure 1.

Comment 15

Figure 1 raises many issues:

1. Empirical studies require their own form of conceptualisation, for example: ‘problems’; ‘variables’; ‘tasks’ etc. These concepts would need specification before they could be conceptualised in the form of multiple models and operationalised for system designers.

2. What is the relationship between ‘hypothesis’ and the thories/knowledge of Psychology? Would the latter inform the former? If so, how exactly? This remains an endemic problem for applied science (see Kuhn, 1970).

3. Are ‘models’, as represented here, in some (or any) sense Psychological theories or knowledge? The point needs to be clarified – see also Comment 15 (1) earlier.

4. What might be the ‘output to system designers’ – guidelines; principles; systematic heuristics; hints and tips; novel design solutions; methods; education/training etc? See also Comment 14.

5. How is the ‘output to system designers’ to be validated? There is no arrow back to either ‘models’ or ‘working hypotheses’. At the very least, validation requires: conceptualisation; operationalisation; test; and generalisation. But with respect to what – hypotheses for understanding phenomena or with respect to designing artefacts?

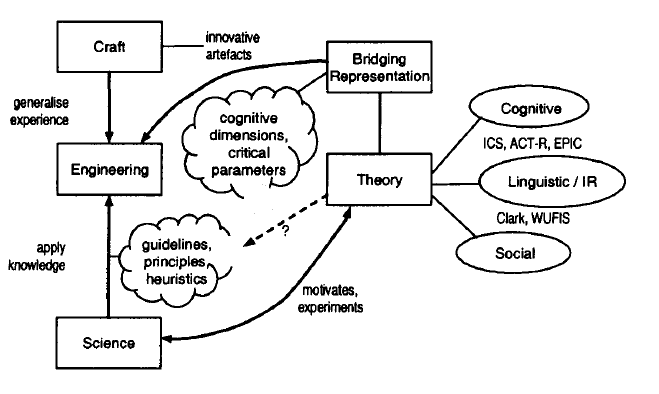

Relating Conceptual and Empirical Tools

Comment 16The relationship between conceptual and analytic tools and their illustration reads like engineering. In ’79, I thought that we were doing ‘applied science’ (following in the footsteps of Donald Broadbent, the MRC/APU’s director in 1979). The distinction between engineering and applied science needs clarification in any republished version of the original paper.

Interestingly enough, Card, Moran and Newell (1983) claimed to be doing ‘engineering’. Their primary models were the Human Information Processing (HIP) Model and the Goals, Operators, Methods and Strategies (GOMS) Model. There is some interesting overlap with some of our multiple models; but also important differences. One option for a republished paper would be to keep to the ’79 multiple models. An alternative option would to augment the HIP and GOMS with the ’79 multiple models (or vice versa), to offer a (more) complete expression of either approach taken separately.

The conceptual tools involve the development of a set of analytic frameworks appropriate to human computer interaction. The empirical tools involve the development of valid test procedures both for the introduction of new systems and the proving of the analytic tools. The two kinds of tool are viewed as fulfilling functions comparable to the role of analytic and empirical tools in the development of technology. They may be compared with the analytic role of physics, metallurgy and aerodynamics in the development of aircraft on the one hand and the empirical role of a wind tunnel in simulating flight on the other hand.

Empirical Tools

The first class of empirical tool we have employed is the observational field study, with which we aim to identify some of the variables underlying both the occasional user’s perceptions of the problems he encounters in the use of a computer system, and the behaviour of the user at the terminal itself.

Comment 17Observational field studies have undergone considerable development since ’79. Many have become ethnomethodological studies, to understand the context of use, others have become front-ends to user-centred design methodologies, intended to be conducted in parallel to those of software engineering. Neither sort of development is addressed by our original paper. Both raise numerous issues, including: the mutation of lay-language into technical language; the relationship between user opinions/attitudes and behaviour; the relationship between the simulation of domains of application and experimental studies; the integration of multiple variables into design; etc.

The opinions cited above were obtained in a study of occasional users discussing the introduction and use of a system in a local government centre [2]. The discussions were collected using a technique which is particularly free from observer influence [3 ].

In a second field study we obtained performance protocols by monitoring users while they solved a predefined set of problems using a data base manipulation language [4 ]. We recorded both terminal performance and a running commentary which we asked the user to make, and wedded these to the state of the machine to give a total picture of the interaction. The protocols have proved to be a rich source of classes of user problem from which hypotheses concerning the causes of particular types of mismatch can be generated.

Comment 18HCI has never given the concept of ‘classes of user problem’ the attention that it deserves. Clearly, HCI has a need for generality (see Comment 10, concerning (systematic) principles with their implications of generalisation). Of course, generalising over user problems is critical; but so more comprehensively is generalising over ‘design problems’. The latter might express the ineffectiveness of users interacting with computers to perform tasks (or somesuch). The original paper does not really say much about generalisation – its conceptualisation; operationalisation; test; and – taken together – validation. Any republication would have to rise to this challenge.

The concept of ’cause’ here is redolent of science, for example, as in Psychology. See also Comment 18, as concerns phenomena and Comment 15 for a contrast with engineering. Science and engineering are very different disciplines. Any re-publication would have to address this difference and to locate the multiple models and their application with respect to it.

There is thus a close interplay between these field studies, the generation of working hypotheses and the development of the conceptual frameworks. We give some extracts from this study in a later section.

Comment 20This claim would hold for both a scientific (or applied scientific) and an engineering endeavour. See also Comments 15 and 18 earlier. However, both would be required to align themselves with Figures 1 and 2 of the original paper.

A third type of empirical tool is used to test specific predictions of the working hypothesis.

Comment 21The testing of predictions (which in conjunction with the explanation of phenomena, together constituting understanding) suggests the notion of science (see Comments 18 and 19), which can be contrasted with the prescription of design solutions (which in conjunction with the diagnosis of design problems, together constituting design of artefacts), as engineering (see Comment 15). The difference concerning the purpose of multiple models needs clarification.

The tool is a multi-level interactive system which enables the experimenter to simulate a variety of user interfaces, and is capable of modeling and testing a wide range of variables [5]. It is based on a code-breaking task in which users perform a variety of string-manipulation and editing functions on coded messages.

It allows the systematic evaluation of notational, semantic and syntactic variables. Among the results to be extensively reported elsewhere is that if there is a common argument in a set of commands, each of which takes two arguments, then the common argument must come first for greatest ease of use. Consistency of argument order is not enough: when the common argument consistently comes second no advantage is obtained relative to inconsistent ordering of arguments [6].

Comment 22The 2-argument example is persuasive on the face of it; but is it a ‘principle’ (see Comment 10) and might it appear in the ‘output to designers’ (Figure 1 and Comment 15(4))? If so, how is its domain independence established? This point raises again the issue of generalisation – see also Comment 17.

Conceptual Tools

Since we conceive the problem as a cognitive one, the tools are from the cognitive sciences.

Comment 23The claim is in no way controversial. However, it raises the question of whether the interplay between these cognitive tools and the working hypotheses (see Figure 1) also contribute to Cognitive Science (that is, Psychology)? See also Comment 15(3). Such a contribution would be in addition to the ‘output to designers’ of Figure 1.

Also we define the problem as one with those users who would be considered intellectually and motivationally qualified by any normal standards. Thus we do not admit as a potential solution that of finding “better” personnel, or simply paying them more, even if such a solution were practicable.

Comment 24If ‘design problem’ replaced ‘user problem’ (see also Comment 18), then better personnel and/or better pay might indeed contribute to the design (solution) of the design problem. The two types of problem, that is, design problem and user problem need to be related and grounded in the Framework. The latter, for example, might be conceptualised as a sub-set of the former. Eitherway, additional conceptualisation of the Framework is required. See also Comment 18.

The cognitive incompatibility we describe is qualitative not quantitative and the mismatch we are looking for is one between the user’s concept of the system structure and the real structure: between the way the data base is organised in the machine and the way it is organised in the head of the user: the way in which system details are usually encountered by the user and his preferred mode of learning.

The interaction of human and computer in a problem-solving environment is a complex matter and we cannot find sufficient theory in the psychological literature to support our intuitive needs. He have found it necessary to produce our own theories, drawing mainly on the spirit rather than the substance of established work.

Comment 25It sounds like our ‘own’ theories are indeed psychological theories (or would be if constructed). See also Comments 21 and 23.

Further than this, it is apparent that the problem is too complex for us to be able to use a single theoretical representation.

Comment 26Decomposition (as in multiple models) is a well-tried and trusted solution to complexity. However, re-integration will be necessary at some stage and for some purpose. Understanding (Psychology) and design of artefacts (HCI) would be two such (different) purposes. They need to be distinguished. See also Comment 15(5).

The model should not only be appropriate for design, it should also give a means of characterising errors – so as to understand their origins and enable corrective measures to be taken.

Comment 27What characterises a ‘model appropriate for design’? (see also Comment 15(4) and(5)). Design would have to be conceptualised for this purpose. Features might be derived from field studies of designer practice (see Figure 1); but a conceptualisation would not be ‘given’; but would have to be constructed (in the manner of the models). This construction would be a non-trivial undertaking. But how else could models be assured to be fit-for-(design)purpose? See also Comment 14).

Take the following protocol.

The user is asked to find the average age of entries in the block called PEOPLE.

“I’ll have a go and see what happens” types: *T <-AVG(AGE,PEOPlE)

machine response: AGE – UNSET BLOCK

“Yes, wrong, we have an unset block. So it’s reading AGE as a block, so if we try AGE and PEOPLE the other way round maybe that’ll work.”

This is very easy to diagnose and correct. The natural language way of talking about the target of the operation is mapped straight into the argument order. The cure would be to reverse the argument order for the function AVG to make it compatible.

Comment 28Natural language here is used both to diagnose ‘user problems’ and to propose solutions to those problems. Natural language, however, does not appear in the paper as a model, as such. Its extensive nature in psychology/linguistics would prohibit such inclusion. Further, there are many theories of natural language and no agreement as to their state of validation (or rejection). However, the model appears as a block in the BIM (see Figure 2). The model/representation, of course, might be intuitive, in the form and practice of lay-language, which we all possess. However, such intuitions would also be available to software engineers and would not distinguish systematic from non-systematic principles ( see Comment 10). The issue would need to be addressed in any re-publication of the ’79 paper.

The next protocol is more obscure. The task is the same as in the preceding one.

“We can ask it (the computer) to bring to the terminal the average value of this attribute.”

types: *T -AVG( AGE)

machine response: AVG(AGE) – ILLEGAL NAME

“Ar.d it’s still illegal. .. ( … ) I’ve got to specify the block as well as the attribute name.”

Well of course you have to specify the block. How else is the machine going to know what you’re talking about? A very natural I.M. response. How can we be responsible for feeble memories like this.

However, a more careful diagnosis reveals that the block PEOPLE is the topic of the ‘conversation’ in any case.

Comment 29Is ‘topic of conversation’, as used here an intuition, derived from lay-language or a sub-set of some natural language theory, derived form Psychology/Linguistics? This is a good example of the issue raised by Comment 28. The same question could be asked of the use of ‘natural language conventions’, which follows next.

The block has just been used and the natural language conventions are quite clear on the point.

We have similar evidence for the importance of human-machine discourse structures from the experiment using the code-breaking task described above. Command strings seem to be more ‘cognitively compatible’ when the subject of discourse (the common argument) is placed before the variable argument. This is perhaps analogous to the predisposition in sentence expression for stating information which is known or assumed before information which is new [7]. We are currently investigating this influence of natural language on command string compatibility in more detail.

Comment 30These natural language interpretations and the associated argumentation remain both attractive and plausible. However, command languages in general (with the exception of programmers) have fallen out of favour. Given the concept of the domain of application/tasks and the requirements of the Goal Structure Model, some addition to the natural language model would likely be required for any re-publication of the ’79 paper. Some relevance-related, plan-based speech act theory might commend itself in this case.

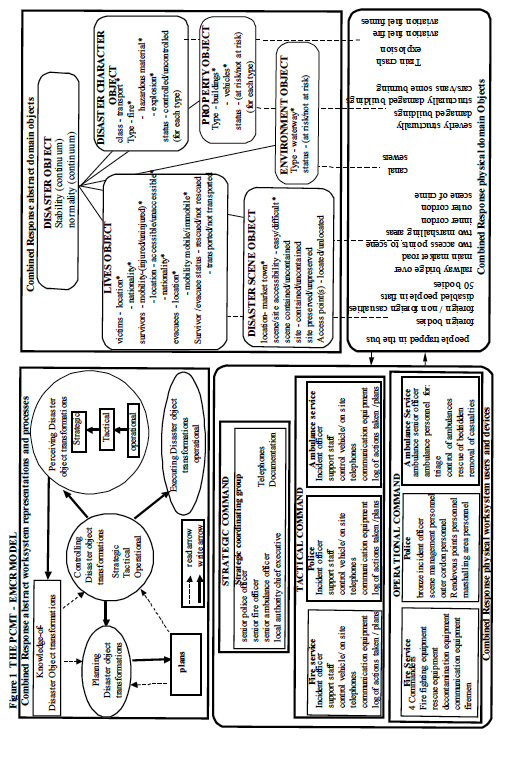

The Block Interaction Model

Comment 31The BIM remains a very interesting and challenging model and was (and remains) ahead of its time. For example, the very inclusion of the concept of domain (as a hospital; jobs in an employment agency etc); but, in addition, the associated representations of the user, the computer and the workbase. Thirty-four years later, HCI researchers are still ‘trying to pick the bits/blocks out of that’ in complex domains such as air traffic and emergency services management. Further development of the BIM in the form of more completely modeled exemplars would be required by any republished paper.

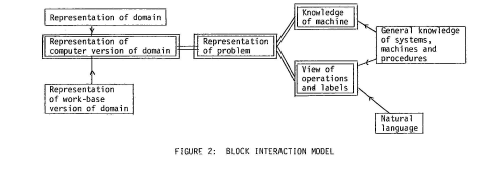

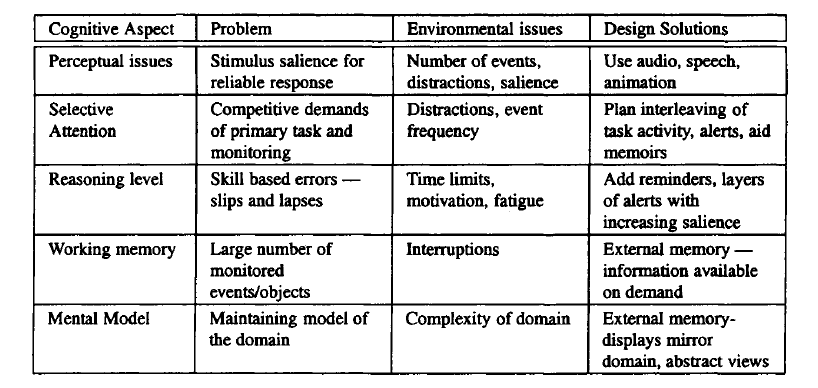

Systematic evidence from empirical studies, together with experience of our own, has led us to develop a conceptual analysis of the information in the head of the user (see figure 2). Our aim with one form of analysis is to identify as many separable kinds of knowledge as possible and chart their actual or potential interactions with one another. Our convention here is to use a block diagram with arrows indicating potential forms of interference. This diagram enables us to classify and thus group examples of interference so that they could be counteracted in a coordinated fashion rather than piecemeal. It also enables us to establish a framework within which to recognise the origin of problems which we haven’t seen before. Figure 2 is a simplified form of this model. The blocks with double boundaries, connected by double lines, indicate the blocks of information used by the ideal user. The other lines indicate prime classes of interference. The terminology we have used is fairly straightforward: Domain – the range of the specific application of a system. This could be a hospital, a city’s buildings, a set of knowledge such as jobs in ~n employment agency. Objects – the elements in the particular data base. They could be a relational table, patients’ records. I Representation of domain I Representa ti on of work-base version of domain domain Representation of problem Operations – the computer routines which manipulates the objects. Labels – the letter sequences which activate operators which, together with arguments and syntax, constitute the commands. Work base – in general, people using computer systems for problem solving have had experience of working in a non-computerised work environment either preceding the computerisation or at least in parallel with the computer system. The representation of this experience we call the work-base version. There will be overlap between this and the users representation of the computer’s version of the domain; but there will be differences as well, and these differences we would count as potential sources of interference. There may be differences in ·the underlying structure of the data in the two cases, for example, and will certainly be differences in the objects used. Thus a user found to be indulging in complex checking procedures after using the command FILE turned out to be perplexed that the material filed was still present on the screen. With pieces of paper, things which are filed actually go there rather than being copied. Here are some examples of interference from one of our empirical studies [4]:

Interference on the syntax from other languages. Subject inserts necessary blanks to keep the strings a fixed length.

“Now that’s Matthewson, that’s 4,7, 10 letters, so I want 4 blanks”

types: A+<:S:NAME = ‘MATTHEWSON ‘:>PEOPLE

Generalised interference

“Having learned how reasonably well to manipulate one system, I was presented with a totally different thing which takes months to learn again.”

Interference of other machine characteristics on machine view

“I’m thinking that the bottom line is the line I’m actually going to input. So I couldn’t understand why it wasn’t lit up at the bottom there, because when you’re doing it on (another system) it’s always the bottom line.”

Comment 32These examples do not do justice to the BIM – see Comment 31. More complete and complex illustrations are required.

The B.I.M. can be used in two ways. We have illustrated its utility in pinpointing the kinds of interference which can occur from inappropriate kinds of information. We could look at the interactions in just the opposite way and seek ways of maximising the benefits of overlap. This is, of course, the essence of ‘cognitive compatibility’ which we have already mentioned. Trivially, the closer the computer version of the domain maps onto the user’s own version of the domain the better. What is less obviou~ is that any deviations should be systematic where possible.

Comment 33In complex domains (see Comment 31), the user’s own model is almost always implicit. Modeling that representation is itself non-trivial. A re-published paper would have to make at least a good stab at it.

In the same way, it is pointless to design half the commands so that they are compatible with the natural language equivalents and use this as a training point if the other half, for no clear reason, deviate from the principle. If there are deviations then they should form a natural sub-class or the compatibility of the other commands will be wasted.

Information Structures

In the block interaction model we leave the blocks ill-defined as far as their content is concerned. Note that we have used individual examples for user protocols as well as general principles in justifying and expanding upon the distinctions we find necessary. What we fail to do in the B. I .M. is to characterise the sum of knowledge which an individual user carries around with him or brings to bear upon the interaction. We have a clear idea of cognitive compatibility at the level of an individual. If this idea is to pay then these structures must be more detailed.

There is no single way of talking about information structures. At one extreme there is the picture of the user’s knowledge as it apparently reveals itself in the interaction; the view, as it were, that the terminal has of its interlocutor. From this point of view the motivation for any key press is irrelevant. This is clearly a gross oversimplification.

The next stage can be achieved by means of a protocol. In it we would wish to separate out those actions which spring from the users concept of the machine and those actions which were a result of him being forced to do something to keep the interaction going. This we call ‘heuristic behaviour’. This can take the form of guessing that the piece of information which is missing will be consistent with some other system or machine. “If in doubt, assume that it is Fortran” would be a good example of this. The user can also attempt to generalise from aspects of the current system he knows about. One example from our study was where the machine apparently failed to provide what the user expected. In fact it had but the information was not what he had expected. The system was ready for another command but the user thought it was in some kind of a pending state, waiting with the information he wanted. In certain other stages – in particular where a command has produced a result which fills up the screen – he had to press the ENTER key – in this case to clear the screen. The user then over-generalised from this to the new situation and pressed the ENTER key again, remarking

“Try pressing ENTER again and see what happens.”

We would not want to count the user’s behaviour in this sequence as representing his knowledge of the system – either correct knowledge or incorrect knowledge. He had to do something and couldn’t think of anything else. When the heuristic behaviour is eliminated we are left with a set of information relevant to the interaction. With respect to the full, ideal set of such information, this will be deficient with respect to the points, at which the user had to trust to heuristic behaviour.

Comment 34The concept of ‘heuristic behaviour’ has never received the attention that it deserves in HCI research, although it must be recognised that much user interactive behaviour is of this kind. The proliferation of new interactive technologies (see Comment 3) is likely to increase this type of behviour by users attempting to generalise across technologies. A re-published paper would have better to relate the dimension of heuristic to that of correctness both with respect to user knowledge and user behaviour.

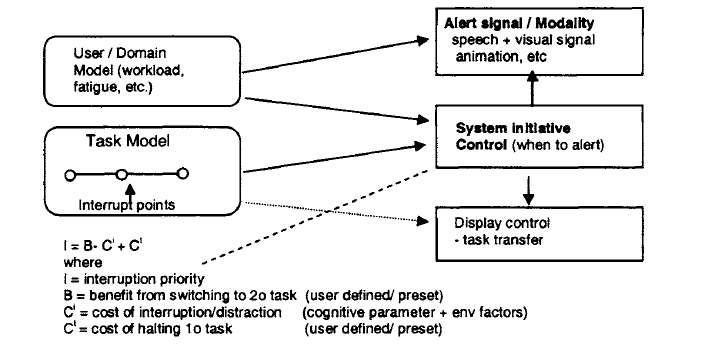

Note that it will also contain incorrect information as well as correct information; all of it would be categorised by the user as what he knew, if not all with complete confidence, certainly with more confidence than his heuristic behaviour. The thing which is missing from B.I.M. and I.S. is any notion of the dynamics of the interaction. We find we need three additional notations at the moment to do this. One of these describes the planning activity of the user, one charts the changes in state of user and machine and one looks at the general cognitive processes which are mobilised.

Comment 35The list of models required, in addition to the B.I.M. and the I.S. is comprehensive – planning, user-machine state changes, and cognitive processes. However, it might be argued that yet another model is required – one which maps the changes of the domain as a function of the user-computer interactive behaviours. The domain can be modeled as object-attribute-state (or value) changes, resulting from user-computer behaviours, supported respectively by user-computer structures. Such models currently exist and could be exploited by any re-published paper.

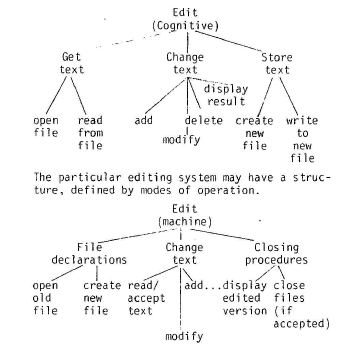

Goal Structure Model

The user does some preparatory work before he presses a key. He must formulate some kind of plan, however rudimentary. This plan can be represented, at least partially, as a hierarchical organisation. At the top might be goals such as “Solve problem p” and at the bottom “Get the computer to display Table T”. The Goal Structure model will show the relationships among the goals.

Comment 36The G.S.M. is a requirement for designing human-computer interactions. However, it needs to be related in turn to the domain model (see Comments 31, 32 and 33). In the example, the document in the G.S.M. is transformed by the interactive user-computer behaviours from ‘unedited’ to ‘edited’. Any hierarchy in the G.S.M. must take account of any other type of hierarchy, for example, ‘natural’, represented in the domain model (see also Comment 35). The whole issue of so-called situated plans a la Suchman would have to be addressed and seriously re-assessed (see also Comment 37).

This can be compared with the way of structuring the task imposed by the computer. For example, a user’s concept of editing might lead to the goal structure:

Comment 37

HCI research has never recovered from loosing the baby with the bath-water, following Suchman’s proposals concerning so-called ‘situated actions’. Using the G.S.M, a republished paper could bring some much needed order to the concepts of planning. Even the simple examples provided here make clear that such ordering is possible.

Two problems would arise here. Firstly the new file has to be opened at an ‘unnatural’ place. Secondly the acceptance of the edited text changes from being a part of the editing process to being a part of the filing process.

The goal structure model, then, gives us a way of describing such structural aspects of the user’s performance and the machines requirements. Note that such goals might be created in advance or at the time a node is evaluated. Thus the relationship of the GSM to real time is not simple.

The technique for determining the goal structure may be as simple as asking the user “What are you trying to do right now and why?” This,may be sufficient to reveal procedures which are inappropriate for the program being used.

Comment 38Complex domain models, for example, of air traffic management and control would require more sophisticated elicitation procedures than simple user questioning. User knowledge, supporting highly skilled and complex tasks is notoriously difficult to pin down, given its implicit nature. So-called ‘domain experts’ would be a possible substitute; but that approach raises problems of its own (for example, when experts disagree). A re-published paper would at least have to recognise this problem.

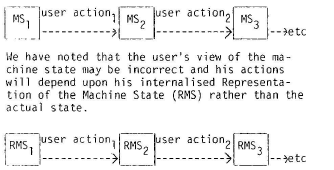

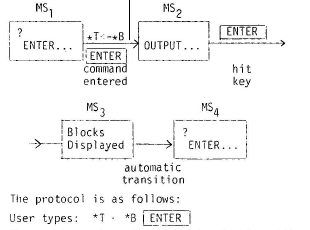

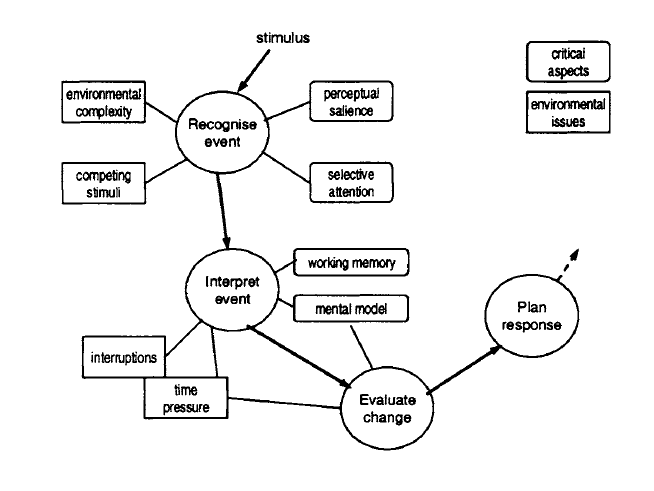

State Transition Model

In the course of an interaction with a system a number of changes take place in the state of the machine. At the same time the user’s perception of the machine state is changing. It will happen that the user misjudges the effect of one command and thereafter’ enters others which from an outside point of view seem almost random. Our point is, as before, that the interaction can only be understood from the point of view of the user.

Comment 39

The S.T.M. needs in turn to be related to the domain model (See Comments 31 and 35). These required linkings raise the whole issue of multiple-model re-integration (see also Comment 26).

This brings us to the third of the dynamic aspects of the interaction: the progress of the user as he learns about the system.

Comment 40As with the case of ‘heuristic behaviour’, HCI research has never treated seriously enough the issue of ‘user learning’. Most experiments record only initial engagement with an application or at least limited exposure. Observational studies sometimes do better. We are right to claim that users learn (and attempt to generalise). Designers, of course, are doing the same thing, which results in (at least) two moving targets. Given our emphasis on ‘cognitive mismatch’ and the associated concept of ‘interference’, we need to be able to address the issue of user learning in a convincing manner, at least for the purposes in hand.

Let us explore some ways of representing such changes. Take first of all the state of the computer. This change is a result of user actions and can thus be represented as a sequence of Machine States (M.S.) consequent on user action.

If the interaction is error free, changes in the representations would follow changes in the machine states in a homologous manner. Errors will occur if the actual machine state does not match its representation.

Comment 41At some stage and for some purpose, the S.T.S surely needs to be related to the G.S.M. (and or the domain model). Such a relationship would raise a number of issues, for example, ‘errors’ (see Comment 9) and the need to integrate multiple-models (see also Comments 26 and 39).

We will now look at errors made by a user of an interactive data enquiry system. We will see errors which reveal both the inadequate knowledge of the particular machine state or inadequate knowledge of the actions governing transitions between states. The relevant components of the machine are the information on the terminal display and the state of a flag shown at the bottom right hand corner of the display which ‘informs the user of some aspects of the machine state (ENTER … or OUTPUT … ). In addition there is a prompt, “?”, which indicates that the keyboard is free to be used, there is a key labelled ENTER. In the particular example the user wishes to list the blocks of data he has in his workspace. The required sequence of machine states and actions is:

The machine echoes the command and waits with OUTPUT flag showing.

User: “Nothing happening. We’ve got an OUTPUT there in the corner I don’ t know what that means.

The user had no knowledge of MS2: we can hypothesise his representation of the transition to be:

This is the result of an overgeneralisation. Commands are obeyed immediately if the result is short, unless the result is block data of any size. The point of this is that the data may otherwise wipe everything from the screen. With block data the controlling program has no lookahead to check the size and must itself simply demand the block, putting itself in the hands of some other controlling program. We see here then a case where the user needs to have some fairly detailed and otherwise irrelevant information about the workings of the system in order to make sense of (as opposed to learn by rote) a particular restriction.

The user was told how to proceed, types ENTER, and the list of blocks is displayed together with the next prompt. However, further difficulties arise because the list of blocks includes only one name and the user was expecting a longer listing. Consequently he misconstrues the state of the machine. (continuing from previous example)

User types ENTER

Machine replies with block list and prompt.

Flag set to ENTER …

“Ah, good, so we must have got it right then.

A question mark: (the prompt). It doesn’t give me a listing. Try pressing ENTER again and see what happens.”

User types ENTER

“No? Ah, I see. Is that one absolute block, is that the only blocks there are in the workspace?”

This interaction indicates that the user has derived a general rule for the interaction:

“If in doubt press ENTER”

After this the user realises that there was only one name in the list. Unfortunately his second press of the ENTER key has put the machine into Edit mode and the user thinks he is in command mode. As would be expected the results are strange.

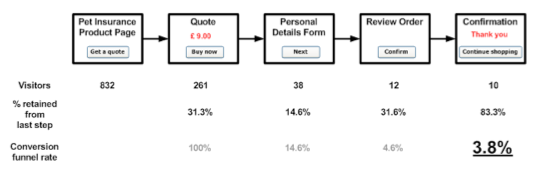

At this stage we can show the machine state transitions and the user’s representation together in a single diagram, figure 3.

This might not be elegant but it captures a lot of features of the interaction which might otherwise be missed.

Comment 42The S.T.M. includes ‘machine states’ and the user’s representation thereof. Differences between the two are likely to identify both errors and cognitive mismatches. However, the consequences – effective or ineffective interactions and domain transformations – are not represented; but need to be related to the G.S.M. ( and the domain model). This raises, yet again, the issue of the relations between multiple-models required in the design process (see Figure 1 and Comments 26 and 39).

The final model we use calls upon models currently available in cognitive psychology which deal with the dynamics of word recognition and production, language analysis and information storage and retrieval. The use of this model is too complex for us to attempt a summary here.

Comment 43Address of the I.P.M. is noticeable only by its intended absence. This may have been an appropriate move at the time. However, any re-published paper would have to take the matter further. In so doing, at least the following issues would need to be addressed:

1. The selection of appropriate Psychology/Language I.P.M.s, of which there are very many, all in different states of development and validation (or rejection). (Note Card et al’s synthesis and simplification of such a model in the form of the HIP – see Comment 15).

2. The relation of the I.P.M. to all other models (see Comments 26, 35, 41 and 42).

3. The need to tailor any I.P.M. to the particular domain of concern to any application, for example, air traffic management (see Comments 6 and 39).

4. The level of description of the I.P.M. See also 1. above.

5. The use of any I.P.M. by designers (see Figure 1).

6. The ‘guarantee’ that Psychology brings to such models in the case of their use in design and the nature of its validation.

Conclusion

We have stressed the shortcomings of what we have called the Industrial Model and have indicated that the new user will deviate considerably from this model. In its place we have suggested an alternative approach involving both empirical evaluations of system use and the systematic development of conceptual analyses appropriate to the domain of person-system interaction. There are, of course, aspects of the I.M. which we have no reason to disagree with, for example, the idea that the computer can beneficially transform the users view of the problems with which he is occupied. However, we would appreciate it if someone would take the trouble to support this point with clear documentation. So far as we can see it is simply asserted.

Comment 44In the 34 years, following publication of our original paper, numerous industry practitioners, trained in HCI models and methods, would claim to have produced ‘clear documentation’, showing that the ‘computer can beneficially transform the user’s view of the problems with which he is occupied’. This raises the whole (and vexed) question of how HCI has moved on since 1979, both in terms of the number and effectiveness of trained/educated HCI practitioners. HCI community progress, clear to everyone, needs to be contrasted with HCI discipline progress, unclear to some.

Finally we would like to stress that nothing we have said is meant to be a solution – other than the methods. We do not take sides for example, on the debate as to whether or not interactions should be in natural language – for we think the question itself is a gross oversimplification. What we do know is that natural language interferes with the interaction and that we need to understand the nature of this interference and to discover principled ways of avoiding it.

Comment 45Natural language understanding and interference smacks of science. Principled ways of avoiding interference smacks of engineering. What is the relationship between the two? What is the rationale for the relationship? What is the added-value to design (see also Comment 15).

And what we know above all is that the new user is most emphatically not made in the image of the designer.

Comment 46The original paper essentially conceptualises and illustrates the need for the proposed ‘Framework for HCI’. That was evil, sufficient unto the day thereof. However, what it lacks thirty-four years later is any exemplars, for example, following Kuhn’s requirement for knowledge development and validation. The exemplars would be needed for any re-publication of the paper and would require the complete, coherant and fit-for-purpose – operationalisation, test and generalisation of the Framework, as set out in Figure 1. A busy time for someone……

References

[1 ] du Boulay, B. and O’Shea, T. Seeing the works: a strategy of teaching interactive programming. Paper presented at Workshop on ‘Computing Skills and Adaptive Systems’, Liverpool, March 1978. [2] Hammond, N.V., Long, J.B. and Clark, l.A. Introducing the interactive computer at work: the users’ views. Paper presented at Workshop on ‘Computing Skills and Adaptive Systems’, Liverpool, March 1978. [3] Wilson. T. Choosing social factors which should determine telecommunications hardware design and implementation. Paper presented at Eighth International Symposium on Human Factors in Telecommunications, Cambridge, September 1977. [4] Documenting Human-computer Mismatch with the occasional interactive user. APU/IBM project report no. 3, MRC Applied Psychology Unit. Cambridge, September 1978. [5] Hammond, N.V. and Barnard, P.J. An interactive test vehicle for the investigation of man-computer interaction. Paper presented at BPS Mathematical and Statistical Section Meeting on ‘Laboratory Work Achievable only by Using a Computer’, London, September 1978. [6] An interactive test vehicle for the study of man-computer interaction. APU/IBM project report no. 1,MRC Applied Psychology Unit, Cambridge, September 1978. [7] Halliday, M.A.K. Notes on transitivity and theme in English. Part 1. Journal of Linguistics, 1967, 3, 199-244.

FIGURE 3: STATE TRANSITION EXAMPLE