Longing for service: Bringing the UCL Conception towards services research

Peter J. Wild

Institute for Manufacturing, University of Cambridge, 17 Charles Babbage Road, UK

John Long's Comment 1 on this Paper

Although I am sure that I have met Wild a couple of times at conferences, I do not really know him personally; I am aware of his work, but I have not had any extended discussions with him. It is with great interest, then, that I read his contribution to the Festschrift, in which he applies the Long and Dowell (1989) and Dowell and Long (1989) Conceptions to Services Research without having worked at the Ergonomics and HCI Unit at UCL. It is a very welcome example of HCI researchers building on each other’s work, an issue which I have raised a number of times in my comments on other Festschrift contributions. In the comments that follow, I am very pleased to try to contribute to Wild’s attempt to apply the Conceptions.

Abstract

There has been an increase in the relevance of and interest in services and services research. There is an acknowledgement that the emerging field of services science will need to draw on multiple disciplines and practices. There is a growing body of work from Human–Computer Interaction (HCI) researchers and practitioners that considers services, but there has been limited interaction between service researchers and HCI. We argue that HCI can provide two major elements of interest to service science: (1) the user centred mindset and techniques; and (2) concepts and frameworks applicable to understanding the nature of services. This second element is the major concern of this paper, in which we consider Long’s work (undertaken with John Dowell) on a Conception for HCI. The Conception stands as an important antecedent to our own work on a framework that: (a) relates the various strands of service research; and (b) can be used to provide high-level integrative models of service systems. Core concepts of the UCL Conception, such as domain, task, and structures and behaviours, partially help to relate different streams of services research systematically, and provide richer descriptions of them. However, when the UCL Conception is moved towards services, additional issues and challenges arise. For example, the kinds of domain changes that are made in services differ; services exist in a wider environment; and effectiveness judgements are dependent on values. We explore these issues and provide reflections on the status of HCI and Service Science.

1. Introduction

As well as becoming an ever more important part of local and global economies, services and service design are emerging as topics that cross, and in some cases redefine, disciplinary boundaries. Papers have emerged in HCI venues that explicitly examine services (e.g. Chen et al., 2009; Cyr et al., 2007; Magoulas and Chen, 2006; van Dijk et al., 2007). Service has become a frequent metaphor for a range of web-based, pervasive and ubiquitous computing applications, where researchers and practitioners often talk of services instead of applications. This is in addition to the service metaphor in Service-Oriented Architectures (Luthria and Rabhi, 2009; Papazoglou and van den Heuvel, 2007) and the Software as a Service concept. The user, value, and worth centred ethos of HCI (e.g. Cockton, 2006; McCarthy and Wright, 2004) is making its way into service design approaches (e.g. Cottam and Leadbeater, 2004; Jones and Samalionis, 2008; Parker and Heapy, 2006; Reason et al., 2009).

Definitions of services stress the intangible, activity, and participatory nature of services (e.g. Hill, 1977; Lovelock, 1983; Lovelock and Gummesson, 2004; Rathmell, 1974; Shostack, 1977; Vargo and Lusch, 2004a). Hill characterised a service as a situation in which “some change is brought about in the condition of some person or good, with the agreement of the person concerned or economic unit owning the good” (1977, p. 318). This definition suggests that services are activities upon objects and artefacts, both natural (people, pets, gardens) and designed (cars, houses, computers), as well as concrete (e.g. bodies, equipment) and abstract (e.g. education, publishing, therapy).

Comment 2

The general discipline problem of HCI is ‘humans and computers interacting to perform work effectively’ (Long and Dowell, 1989). Work is conceived as ‘any activity seeking effective performance’ (Long, 1996). Services, as ‘activities upon objects and artefacts’, thus have much in common with HCI, although the latter puts more emphasis on design, technology and effectiveness.

Hill is also keen to stress the role of exchange, and to distinguish activities that can only be performed by oneself from those that could be performed by others, noting that “if an individual grows his own vegetables or repairs his own car, he is engaged in the production of goods and services. On the other hand, if he runs a mile to keep fit, he is not engaged because he can neither buy nor sell the fitness he acquires, nor pay someone else to keep fit for him” (1977, p. 317). Hence services are potentially transferable activities, performed by oneself or another, to achieve a range of benefits (e.g. saving money, a sense of accomplishment). Some can be legally imposed on an economic unit (e.g. tax, insurance, the MOT), implying a forced transfer.

Recently, the monikers service science and service systems have emerged from initiatives to support an interdisciplinary dialogue on services (IfM and IBM, 2008). Service systems have been defined as “dynamic configurations of people, technologies, organisations and shared information that create and deliver value to customers, providers and other stakeholders” (IfM and IBM, 2008, p. 1), with service science being “the study of service systems and of the co-creation of value within complex constellations of integrated resources” (Vargo et al., 2008, p. 145). There is much in common, both conceptually and empirically, between HCI and service science.

Comment 3

Note that ‘HCI’ and ‘Service Science’ do not necessarily mean the same as ‘HCI Science’ and ‘Service Science’. The latter have ‘Science’ in common, whose discipline problem is understanding, expressed as the explanation and prediction of natural phenomena. The former may differ; for example, HCI as engineering, whose discipline problem is design for effectiveness, expressed as diagnosis and prescription (Dowell and Long, 1989). The use of HCI to inform Service Science needs to be sensitive to such differences.

Commonalities include the goal of creating robust and repeatable activities/experiences that are objectively and/or experientially successful; continuing issues with the speed of change in the phenomena being studied; and the theory–practice gap. However, despite these potential opportunities and overlaps, service science is not simply HCI under another name.

Hence, as an emerging area, service science could benefit from HCI’s experience, specifically: (1) the user centred mindset and techniques; and (2) concepts and frameworks for understanding the nature of services.

Comment 4

Some researchers conceive of HCI as a science, or as an applied science (Carroll, 2010; Dix, 2010). Associated concepts and frameworks would indeed support understanding of HCI and might support understanding of Service Science too. However, concepts and frameworks for HCI as engineering (‘design for effective performance’) are unlikely to support Service Science (but might well support Service Engineering).

It is the second area that is the major concern of this paper, although we return to the issue of the user centred mindset in the paper’s conclusions. HCI has both produced and adopted rich theoretical tooling in its efforts to understand interaction with and through IT artefacts. Whilst these theories are seemingly diverse, with ontological and epistemological differences, they share a common concern to represent the structure of individual and collective activities in a manner that informs the design of new IT artefacts and activities. This key role of activity representations in HCI is often pushed into the background in favour of a view centred on the technology being developed. However, representations of the activity, and latterly of the experience of that activity, that technology supports or enables are among HCI’s key methodological outputs.

One of this paper’s concerns is the UCL Conception (Dowell and Long, 1989), one of the conceptual frameworks put forward for HCI. The Conception offers a set of abstractions for HCI and has guided several streams of work within UCL and elsewhere. Diaper noted that it is “perhaps the most sophisticated framework for the discipline of HCI” (2004, p. 15), with its emphasis on effectiveness/performance being a key part of Diaper’s reasoning behind this assertion. Several of the concepts from Dowell and Long’s work have informed our own framework developed to relate different strands of service research together (Wild et al., 2009a,b); thus Dowell and Long’s work acts as an important antecedent to our work in services.

Comment 5

Wild’s treatment of the Dowell and Long (1989) Conception for an engineering discipline of HCI, as an ‘important antecedent’, implies at least some consensus. This is precisely the pre-requisite for HCI researchers to build on each other’s work to develop more, and more reliable, HCI knowledge and so a mature discipline. See also Dix Comments 3, 4, 10 and 13.

1.1. Paper overview

With this context in mind, Section 2 outlines relevant aspects of services research by covering service definitions. In addition, the section covers our understanding of the UCL Conception (Section 2.2) and relates core concepts from the UCL Conception to different strands of services research (Section 2.2.1). Section 3 first covers the Activity Based Framework for Services (ABFS), our own framework, of which the UCL Conception is an important antecedent. We then consider a number of issues that prevented us from applying Dowell and Long’s concepts as-is to represent and relate strands of services research and model service systems. Finally, we provide a number of illustrative examples of the use of the ABFS for modelling service systems (Section 3.2). Section 4 summarises and concludes the paper, discussing whether services are within the remit of HCI and, if so, what HCI may face when interacting with Service Marketing and Service Operations, two areas that have had a focus on services and have varying claims to user representation and/or involvement.

2. Relevant literature

2.1. Services: a necessarily short and biased overview

It is difficult to trace the growth in importance of services because of differences in the ways that they are defined and reported over time and between countries (Hill, 1977, 1999). Economic downturns aside, a figure that is often cited is that services account for 70–80% of Western economic activity (IfM and IBM, 2008; Parker and Heapy, 2006). During recent years, a number of monikers have been put forward for a shift to service as the focus of economic and intellectual activity.1 Listing these terms would distract the reader, so this overview concentrates on service definitions, with the aim of outlining the different disciplinary perspectives on services.

To even the casual observer, the term services embraces a number of different forms and contexts: from intangible services undertaken on abstract objects (such as information and knowledge), via services on people (such as medicine and education), through to maintenance procedures on hardware. In addition, many contexts include all these types of services. Large-scale availability contracts for complex products can involve information gathering, forecasting, education and training, and the supply of additional tools (e.g. IT artefacts and Support Equipment), in addition to the maintenance of the actual product (Goedkoop et al., 1999; Terry et al., 2007).

Work within Economics and Services Marketing has attempted to provide generic characterisations that show the commonality between these different types of services. The earliest work on service definition was in Economics. Hill (1999) provides a good review of thought on services in Economics; he covers the travails that economists such as Smith, Say, Senior, Mill, Marshall and Hicks went through in trying to define services. This early work characterised services as different from material goods, involving different forms of production and delivery. Ownership rights could be established over goods and, because of their material nature, goods could be stored and inventoried, and their life could be extended through maintenance or remanufacture. In contrast, services were deemed intangible, variable in quality, and incapable of being owned or stored. During the 1970s and 1980s, Services Marketing emerged as a discipline in its own right to study flows of services between producers and consumers, working from the view that products and services were different enough to warrant an approach distinct from mainstream marketing. Two literature reviews (Fisk et al., 1993; Zeithaml et al., 1985) helped to solidify four characteristics as the core distinctions between products and services, namely Intangibility, Heterogeneity, Inseparability, and Perishability (IHIP). Vargo and Lusch summarised these four features as “Intangibility—lacking the palpable or tactile quality of goods. Heterogeneity—the relative inability to standardize the output of services in comparison to goods. Inseparability of production and consumption—the simultaneous nature of service production and consumption compared with the sequential nature of production, purchase, and consumption that characterizes physical products. Perishability—the relative inability to inventory services as compared to goods” (Vargo and Lusch, 2004b, p. 326).
Lovelock and Gummesson (2004) characterised the IHIP qualities as forming a ‘textbook consensus’ on how Services Marketing represented itself to its own students and to other disciplines. The IHIP characteristics partially enabled Services Marketing to ‘break away’ from mainstream product-oriented marketing and fuelled a number of research streams, including the representation and evaluation of services. Several papers (Lovelock and Gummesson, 2004; Vargo and Lusch, 2004b; Wyckham et al., 1975) question the IHIP characteristics as a foundation for Services Marketing. Some researchers suggest refinements (Hill, 1999; Wild et al., 2007). Others suggest alternative paradigms/logics such as Nonownership (Lovelock and Gummesson, 2004) or the Service-Dominant logic (Vargo and Lusch, 2004a).

We return to the Service-Dominant logic later, but one of the most useful refinements of the core IHIP ideas comes from Lovelock (1983) and Hill (1999). Hill (1999) argued for retaining a distinction between services and goods, but held that the dyad needed refining into a triad by adding intangible goods (e.g. books, music compositions, films, processes, plans, blueprints and computer programs). Hill recognised that intangible goods need a manifestation mechanism. Traditionally this has been via physical media, but increasingly the medium is virtual (albeit one which is still reliant on computer memory structures). Lovelock (1983) provided a number of useful characterisations of services, one of which was that they can be tangible or intangible. Combining this with Hill’s triad, we gain a fourfold division: tangible and intangible goods, and tangible and intangible service activities (Wild et al., 2009a).

Comment 6

This division between tangible and intangible goods and services would seem to correspond to Dowell and Long’s (1989) use of the physical and abstract. The latter division would apply both to goods and to services.

A marketable offering will in general provide a range of such tangible and intangible elements (Shostack, 1977); it is also possible to refine each of the four entities and the relationships between them, as well as to relate them to other product and service attributes (Wild et al., 2007).

Outside of economics and marketing, there has been strong and growing interest in services, with research undertaken in disciplines such as engineering, manufacturing, computing, and design. A number of approaches have emerged that explicitly tackle the design of services, or the co-design of product(s) and services. Prominent approaches include Product-Service Systems (PSS; Goedkoop et al., 1999; Tukker, 2004) and Functional Products (FP; Alonso-Rasgado et al., 2004). PSS has often been associated with the sustainability agenda, a key idea being the substitution of a general service function (e.g. transportation) for a specific product (e.g. personally owned cars) to reduce the ecological impact of high levels of under-utilised or inefficient products. Goedkoop et al. (1999) defined a PSS as “a marketable set of products and services capable of jointly fulfilling a user’s need. The PS system is provided by either a single company or by an alliance of companies. It can enclose products (or just one) plus additional services. It can enclose a service plus an additional product. And product and service can be equally important for the function fulfilment” (1999, p. 18). PSS can be seen as designing from the Service-In (Wild et al., 2009b); in contrast, the Functional Product (FP; Alonso-Rasgado et al., 2004) and Industrial Product-Service Systems (IPS2; Aurich et al., 2007) approaches work from the Product-Out (Wild et al., 2009b), offering services that can feasibly be offered around a product or product family. The latter approaches tend to be explicitly motivated by the search for additional profit and revenue opportunities from service (Wild et al., 2009b), along with a general shift from ‘product plus parts’ to providing overall product availability (Terry et al., 2007). The design foci for PSS/FP/IPS2 can cover the product, its support artefacts, related activities and necessary social and organisational structures (Goedkoop et al., 1999; Wild et al., 2009b).
Proposed PSS/FP/IPS2 design approaches can overemphasise technical issues (see Roy and Shehab, 2009); however, because of the influence of marketing, some proposed design processes include elements of customer representation and interaction (e.g. Alonso-Rasgado et al., 2004). Ironically, despite the professed interest in environmental issues, work in PSS has provided little in-depth theorisation about ecological and environmental factors (cf. Costanza et al., 1997; Hawken et al., 1999).

In computing, a service metaphor (rather than mathematical or physical ones, i.e. functions or modules) has driven developments in Service-Oriented Architectures (SOA; Luthria and Rabhi, 2009; Papazoglou and van den Heuvel, 2007). The risk is that SOA researchers see such architectures as being solely concerned with application-to-application interaction (Kounkou et al., 2008), ignoring the fact that these applications are carrying out activities for people. Some work links business process models and SOAs, but Kounkou et al. (2008) argue that service as an analogy for software modularity misses deeper user centred abstractions based on the needs and values of various stakeholders. Despite a variety of perspectives within the process modelling communities (Melão and Pidd, 2000), there is sparse evidence that a user centred perspective is being taken in such process modelling efforts. Work in progress is moving towards remedying this by integrating HCI knowledge into SOA development lifecycle practices (Kounkou et al., 2008), or by supporting the composition of services by non-technical users (Namoune et al., 2009). In addition to work in SOA, there is the concept of Software as a Service (SaaS), whereby an IT artefact is used but transfer of ownership does not take place (Bennett et al., 2000). These concepts have interacted with the SOA and Cloud Computing communities, which have largely focussed on exploring architectures. Again we see a lack of input from HCI knowledge and practice and a corresponding lukewarm reception (Pring and Lo, 2009).

There are a number of service design approaches from the design community (e.g. Cottam and Leadbeater, 2004; Jégou and Manzini, 2008; Nelson, 2002; Parker and Heapy, 2006). In many ways, design practitioners are the vanguard of the interaction between HCI concepts and approaches and the design of services (see Jones and Samalionis, 2008; Parker and Heapy, 2006; Reason et al., 2009). Parker and Heapy assert that the prevailing mindset is that services are “seen as a commodity, rather than something deeper, a form of human interaction” (p. 8). They utilise a number of techniques from HCI, but there is little linkage of their work to explanatory accounts of design processes. Without such explanations, it is unclear how much service design success is due to the craft skill of the designers involved and how much is down to the methods and ethos employed. Nelson (2002), a designer and systems theorist, discussed various service metaphors (Lip, Room, Social/Public, Military/Protective) along with their strengths and weaknesses. Nelson’s concern is to use design processes to enable an approach that combines the best of these service metaphors whilst avoiding their downsides. The goal is what Nelson terms “full service”, which “is adequate essential and significant to the well-being of the clients and stakeholders” (Nelson, 2002, p. 46).

Comment 7

Wild’s reference to HCI ‘explanatory accounts of design processes’ is consistent with his earlier references to HCI and Service Science (according to one meaning at least; see Comment 3). Like Dix and Carroll, Wild needs to make explicit (and so show how to validate) the relation between Science, as understanding, and Applied Science/Engineering, as design (see also Dix Comment 1).

Such full service involves: a relationship of maturity and complexity; a conspiracy of empathy and creative struggle; a contract between equals where all parties have a voice; provision for the common good; and evocation of the uncommon good (Nelson, 2002, p. 416). Nelson offers little in the way of methodological support, but related work by Cockton in HCI could assist the exploration of service values (e.g. Cockton, 2006).

Nelson’s consideration of the values that drive design practice, and of those that services should enable, leads us to consider a position that has emerged in Services Marketing: the Service-Dominant Logic (SDL; Vargo and Lusch, 2004a). Here all marketable offerings, whether classed as goods or services, are considered to provide an element of service. Building on Hill’s (1977) definition presented previously, service is defined as “the application of specialized competences (knowledge and skills), through deeds, processes, and performances for the benefit of another entity or the entity itself” (Vargo and Lusch, 2004a).

Comment 8

This definition of Service is not inconsistent with the Conception of the HCI discipline as knowledge supporting practice to solve the design problem of humans interacting with computers to perform effective work, here desired changes to goods and services.

Products, whether physical or software, along with services, exist to provide service. When we buy a product such as a car or bike, we gain both the product and the benefit of the skills of those who produce and supply it to us. When we use a service such as a bus, we gain the benefit of the journey and of the skills and capabilities of the bus company, but also temporary use of their products. The distinctions between products and services, it is argued, become irrelevant (Vargo and Lusch, 2004b); both are approaches to providing something the recipient cannot or will not do themselves. Stauss (2005) is one of the most strident critics of this position, noting that the fact that physical goods and services both bring about value does not necessarily imply that both are produced in the same way or that they bring about the same kind of value in the same way.

A key part of the SDL is its emphasis on value-in-use rather than value-in-exchange.

Comment 9

HCI seems to have little to say currently, as concerns the difference between ‘value-in-exchange’ and ‘value-in-use’. However, there is no reason to doubt that the difference, providing that it is specifiable, can be reflected in the concept of effectiveness over time, rather than at a single point in time.

Essentially, value-in-exchange locates the value of a product or service at the point at which it is exchanged (e.g. sold), whereas value-in-use holds that the value or benefit of a product or service is not realised until the product is used or the service is used/enacted; it is the value actually received from use of a product or receipt of a service. Whilst the two concepts have been around since Aristotle, it is claimed that the predominant mindset in society has been value-in-exchange (Ramirez, 1999; Vargo and Lusch, 2004a). However, beyond the distinction between value-in-exchange and value-in-use, there remains considerable ambiguity within Vargo and Lusch’s literature on the very meaning of value. Their original paper (Vargo and Lusch, 2004a) uses the term value over 100 times without definition.2 Since Vargo and Lusch’s earlier presentations, a tenth Foundational Premise has been added to the SDL, stating that “value is always uniquely and phenomenologically determined by the beneficiary” (Vargo and Lusch, 2008). This appeal to the subjective and intersubjective nature of value is predated by work in Economics such as that of the ‘Austrian’ school.3 Finally, the SDL builds on two types of resources. Operand resources are physical resources upon which an operation or act is performed to produce an effect. Operant resources are employed to act upon operand resources and concern issues such as knowledge and skills; they are “likely to be dynamic and infinite and not static … they enable humans to multiply the value of natural resources and to create additional operant resources” (Vargo and Lusch, 2004a, p. 3).

HCI may be a discipline well placed to explore the implications of this conceptualisation of product and service use. Its focus on user participation in design processes could be extended to provide analysis and guidance throughout the life of artefacts.

Comment 10

Wild’s claim here is consistent with the proposal made in Comment 8.

There is relevant work on issues such as aesthetics (Hassenzahl et al., 2000; Lindgaard and Whitfield, 2004), experience (McCarthy and Wright, 2004; Sengers, 2003), emotion (Harper et al., 2008), and value(s) (Cockton, 2007; Harper et al., 2008) to draw upon in turning the SDL into a methodologically tractable approach.

Comment 11

There is, indeed, HCI research into aesthetics, experience, emotion, and values, as claimed by Wild. However, the work has yet to be turned into a ‘methodologically tractable approach’ for HCI, never mind for products and services over time. Nevertheless, the research remains available for application by SDL (Service-Dominant Logic), as stated by Wild.

However, to date HCI has had limited interest in post-delivery usage. Data can be collected as a basis for redesigning the next version, but the founding methodological principle of early and continuous focus on users and their tasks fades once an artefact is delivered (Wild and Macredie, 2000). To assume that an IT artefact assessed as effective at launch will remain so throughout its lifetime seems naive. Therefore, whilst HCI in principle pursues value-in-use, its actual performance in evaluating it falls below its potential and stated ethos.

Finally, our concern turns to service representation. Shostack (1984) developed and introduced Service Blueprinting, which has become the primary representation technique for services. It originally included: the temporal order of customer and service provider actions; the timings of these actions; tangibles in support of the activities; and the line of visibility (i.e. separating actions that service recipients can and cannot see in their exchanges with a service provider). Service Blueprinting is described in numerous services marketing textbooks, and later publications have refined and elaborated it (e.g. Bitner et al., 2007; Fließ and Kleinaltenkamp, 2004), including through a spiral lifecycle model. These refinements have increased the number of lines of interaction. Fließ and Kleinaltenkamp (2004), for example, list five: (1) interaction, separating customer and supplier interaction; (2) visibility, what customers see; (3) internal interaction, front and back office capabilities; (4) order penetration, activities that are independent of and dependent on customers; and (5) implementation, separating planning, management, control, and support activities. Of all the work in Services Marketing, Service Blueprinting has been one of the approaches used most ‘outside’ the discipline, for example in research and practice in Functional Products (Alonso-Rasgado et al., 2004), Design (Parker and Heapy, 2006), and Product-Service Systems (Morelli, 2006). There is, however, no known conceptual or empirical comparison of Service Blueprinting with other methods for mapping processes or analysing tasks (e.g. CTT, IDEF, BPMN, UML). There are no known studies on the efficacy of Service Blueprinting or its perceived or actual usability by end users of a service. Neither is it clear what depth of user representation and involvement the approach requires.
Is it simply an attempt by service designers to represent the service from what they think is the user’s perspective, or does Service Blueprinting demonstrate a deeper philosophical commitment (see Bekker and Long, 2000) to user involvement and representation?
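The elements of Shostack’s original blueprint (temporal order of customer and provider actions, timings, supporting tangibles, and the line of visibility) can be illustrated with a minimal Python sketch. It is an assumption-laden illustration only: the `Step` class, the helper functions and the car-servicing example are hypothetical, list order stands in for temporal order, and the additional lines proposed by Fließ and Kleinaltenkamp (2004) are not modelled.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    """One action in a simplified, hypothetical Service Blueprint."""
    name: str
    actor: str             # "customer" or "provider"
    minutes: float         # planned timing of the action
    visible: bool = True   # False places a provider action below the line of visibility
    tangibles: list[str] = field(default_factory=list)  # supporting physical evidence


def customer_view(blueprint: list[Step]) -> list[str]:
    """Steps a service recipient can see, in temporal (list) order."""
    return [s.name for s in blueprint if s.actor == "customer" or s.visible]


def hidden_work(blueprint: list[Step]) -> list[str]:
    """Provider actions below the line of visibility (back-office work)."""
    return [s.name for s in blueprint if s.actor == "provider" and not s.visible]


def total_minutes(blueprint: list[Step]) -> float:
    """Total planned time, assuming the steps run strictly one after another."""
    return sum(s.minutes for s in blueprint)


# Hypothetical car-servicing blueprint; list order is the temporal order.
car_service = [
    Step("book service online", "customer", 5, tangibles=["booking website"]),
    Step("receive car at desk", "provider", 5, tangibles=["reception desk"]),
    Step("order spare parts", "provider", 15, visible=False),
    Step("inspect and repair car", "provider", 90, visible=False),
    Step("explain work and invoice", "provider", 10, tangibles=["invoice"]),
    Step("collect car", "customer", 5),
]

print(customer_view(car_service))
print(hidden_work(car_service))
print(total_minutes(car_service))
```

Comparing `customer_view` with `hidden_work` makes explicit which parts of the service the recipient experiences directly, which is the question the line of visibility addresses; it says nothing about whether the blueprint reflects users’ own perspective, which is the open question raised above.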

We caution against viewing services research as coherent, despite the large amounts of rhetoric, discussion, support from industrial sources, and recent research funding in the area. Our own modest efforts in this area have shown relationships between activity concepts embodied in approaches such as task analysis, process modelling, and the UCL Conception, and a range of research strands in services (Wild et al., 2009a,b), but this remains a long way from a unified and accepted paradigm. What this work recognised was that, across the varieties of services research in existence (some of which we have covered here), there are a number of recurring concerns that had not been brought together within one framework. These concerns cover: value and values; the relationship between domains and activities; the relationship between products and domains, and between service activities and domains; and the relationship between service provider and service recipient and the kinds of overt and covert resources that are used in service performance. In turn, concepts within service approaches relate to activity-oriented approaches such as Task Analysis, Process and Domain modelling. Dowell and Long’s work was an important antecedent to our work, and this paper provides an opportunity to reflect on both its influence and why it could not be used as-is to represent and relate strands of services research and model service systems.

2.2. The UCL Conception

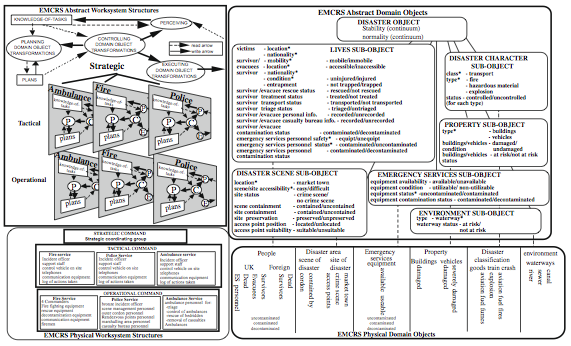

One of Long’s many contributions to HCI is his work with John Dowell on what we label the UCL Conception (see Dowell and Long, 1989, 1998). The UCL (University College London) Conception’s utility has been demonstrated in a number of contexts: it has been used to compare task analysis methods; to scope HCI education syllabi; to help understand change occurrences; to model task planning, control, perception and execution; and in work on emergency management, air traffic control, and cooperative work.

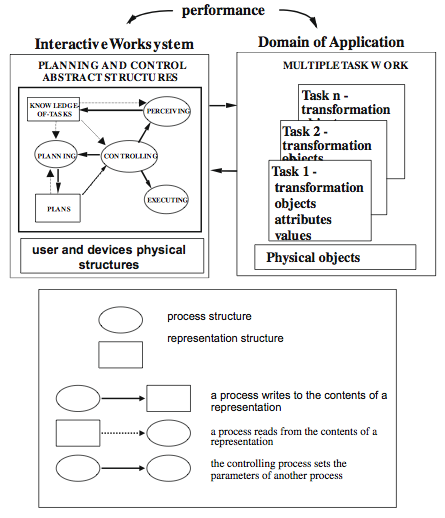

The UCL Conception defines Interactive Work Systems (IWS) as cognitive systems whose scope encompasses two types of participant, people and IT artefacts, interacting to perform tasks in a domain. ‘Domains’ are composed of abstract or physical objects whose attributes may be mutable. Tasks are activities that are concerned with changing these domain object attributes. Organisations express their requirements for changes to a domain through the specification of goals. A ‘product’ goal is scoped towards a domain and is the intention to change several attributes and objects in the domain. A product goal breaks down into a number of ‘task’ goals that alter individual attributes. Different forms of task are recognised, notably interactive, offline, automated (Dowell and Long, 1989; Lim and Long, 1994) and enabling (Whitefield et al., 1993), the latter being tasks that put the IWS into a state where it can be used (e.g. booting, opening applications). Each participant of an IWS (i.e. person or IT artefact) has ‘structures’ and ‘behaviours’ that support task performance. ‘Structures’ provide capabilities in reference to a participant’s environment and are physical (e.g. electronic, neural, biomechanical and physiological) or abstract (e.g. software or cognitive representational schemes and processes). ‘Behaviour’ is the activation of structures to execute the changes in the IT artefact and domain. Similarly, behaviours may be physical, such as printing to paper or selecting a menu, or abstract, such as deciding which document to open, or problem solving. Because many different behaviours can map onto the same structures, people can produce different behaviour from the same physical and psychological structures.
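The relations among these concepts (domain objects and their attributes, product goals decomposed into task goals, and participants whose behaviours change attributes) can be sketched as a small Python data model. This is a deliberately simplified, hypothetical rendering under our own assumptions: the class and function names are illustrative, structures are reduced to text labels and behaviours to attribute assignments, the letter-production example is invented, and the sketch captures neither the distinction between physical and abstract structures and behaviours nor the different forms of task (interactive, offline, automated, enabling).

```python
from dataclasses import dataclass, field


@dataclass
class DomainObject:
    """A domain object whose (mutable) attributes tasks can change."""
    name: str
    attributes: dict[str, str]


@dataclass
class TaskGoal:
    """Intention to change one attribute of one domain object."""
    obj: str
    attribute: str
    value: str


@dataclass
class ProductGoal:
    """Organisational requirement: several attribute changes in the domain."""
    description: str
    task_goals: list[TaskGoal] = field(default_factory=list)


@dataclass
class Participant:
    """A person or IT artefact in an interactive work system (IWS)."""
    name: str
    kind: str                                            # "person" or "IT artefact"
    structures: list[str] = field(default_factory=list)  # capabilities, as labels only


def perform(p: Participant, domain: dict[str, DomainObject], goal: TaskGoal) -> str:
    """A behaviour: the participant changes the attribute named by a task goal."""
    domain[goal.obj].attributes[goal.attribute] = goal.value
    return f"{p.name} set {goal.obj}.{goal.attribute} = {goal.value}"


def achieved(domain: dict[str, DomainObject], goal: ProductGoal) -> bool:
    """A product goal is met when every constituent task goal holds in the domain."""
    return all(domain[t.obj].attributes.get(t.attribute) == t.value
               for t in goal.task_goals)


# Hypothetical letter-production example.
domain = {"letter": DomainObject("letter", {"content": "draft", "layout": "plain"})}
finish_letter = ProductGoal("produce a finished letter", [
    TaskGoal("letter", "content", "final"),
    TaskGoal("letter", "layout", "formatted"),
])
secretary = Participant("secretary", "person", ["typing skill"])
word_processor = Participant("word processor", "IT artefact", ["formatting software"])

before = achieved(domain, finish_letter)  # False: no attribute has changed yet
log = [perform(secretary, domain, finish_letter.task_goals[0]),
       perform(word_processor, domain, finish_letter.task_goals[1])]
after = achieved(domain, finish_letter)   # True: both task goals now hold
```

Representing the product goal as a list of task goals keeps the decomposition explicit, so that whether the IWS has achieved the product goal reduces to checking each attribute change in the domain.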

Comment 12

The Dowell and Long (1989) HCI conception is well described here. However, it is worth noting that: (1) interactions perform tasks with some degree of effectiveness, not just perform them; (2) domain objects are physical or physical and abstract, rather than physical or abstract; (3) at least some domain object attributes are necessarily mutable (otherwise no ‘work’ can be performed, that is, no object attribute transformations can be made by the interactive worksystem); (4) structures are physical or physical and abstract, rather than physical or abstract (as indeed are domain objects; see (2) earlier).

Dowell and Long (1989) view effectiveness (later termed performance) as a function of task quality (the quality of a ‘product’ created by an IWS) and resource costs (the costs to participants of establishing structures and producing behaviours). Desired effectiveness is set by specifying desired task quality and desired resource costs. Similarly, actual effectiveness can be measured as a function of the actual task quality and the actual resource costs (Dowell and Long, 1998, p. 139). Task quality pertains to goals, that is, what people and organisations want from a domain (e.g. speed versus quality). Structural resource costs are the costs of setting up the structures of both IWS participants to carry out tasks, whilst behavioural resource costs are those incurred in producing behaviours during actual task performance. There has been no attempt to produce, or link to, a taxonomy of development costs beyond the distinction between structural and behavioural costs.
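Dowell and Long do not commit to a particular function for combining task quality and resource costs. The sketch below therefore assumes, purely for illustration, a numeric task-quality score, additive structural and behavioural costs, and a simple rule that actual performance meets desired performance when quality is no lower and total cost is no higher. The names `Performance` and `meets_desired`, and the scales used, are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Performance:
    """Hypothetical numeric encoding of performance, not Dowell and Long's own."""
    task_quality: float      # assumed scale: higher is better
    structural_cost: float   # cost of establishing structures
    behavioural_cost: float  # cost of producing behaviours

    @property
    def resource_cost(self) -> float:
        # Assumption: the two kinds of resource cost are simply additive.
        return self.structural_cost + self.behavioural_cost


def meets_desired(actual: Performance, desired: Performance) -> bool:
    """Assumed rule: quality at least as desired and total cost no higher."""
    return (actual.task_quality >= desired.task_quality
            and actual.resource_cost <= desired.resource_cost)


desired = Performance(task_quality=0.9, structural_cost=10.0, behavioural_cost=5.0)
actual = Performance(task_quality=0.95, structural_cost=12.0, behavioural_cost=2.0)
print(actual.resource_cost)            # 14.0
print(meets_desired(actual, desired))  # True
```

Higher structural costs are offset here by lower behavioural costs; a different combining function would be needed wherever quality and cost are traded off rather than judged separately.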

Comment 13

Again, this is a good description of the Dowell and Long (1989) Conception for HCI. However, it is worth noting that: (1) effectiveness and performance are often interchangeable, but in all cases a function of Task Quality and Resource Costs, as stated by Wild; (2) people and organisations desire changes to domain objects (for example, slow versus fast, that is, speed, or high versus low, that is, quality); and (3) structural and behavioural resource (that is, set-up) costs can be quantified (as well as distinguished).

Dowell and Long view the domain as a distinct part of an IWS’s environment: it is the world in which tasks originate, are performed, and have their consequences. The domain characterisation is ‘oriented to objects’. Domains are composed of abstract and physical objects with attributes. These attributes have a state that may be able to change. Attributes can be physical or abstract, and may need to be inferred when the domain is studied. Objects can have both abstract and physical attributes. Printed texts have abstract properties that support the communication of messages and physical properties that support the visual representation of information (Dowell and Long, 1989). There is therefore a coupling between domain objects and entities with structures and behaviours capable of perceiving their affordances. Dowell and Long (1989) maintain that levels of complexity emerge amongst attributes at different levels of analysis. Attributes that emerge may subsume those that arise out of lower levels; thus, a printed text could be a letter, tax return or an instructional text. Dowell and Long (1989) stress that objects be described at an appropriate level, but give no indication of how to do this.

Comment 14

An instance of domain modeling, including the appropriateness of levels of description, can be found in Hill’s paper on the Emergency Management Combined Response System (2010).

Dowell and Long’s work has emphasised physical changes and cognitive processes (i.e. changes to information and knowledge); little is said about social or emotional changes.

Comment 15

Conceptions can be judged in terms of their completeness, coherence, and fitness-for-purpose. The reasons given by Wild for not being able to apply the Dowell and Long (1989) conception directly to services suggest its incompleteness (but do not exclude its non-coherence). Whether its fitness-for-purpose is appropriate or not depends on whether Wild wants to use it for understanding (Services Science) or design (Service Engineering). See also Comment 3.

2.2.1. Relating the UCL Conception to services research

We now consider how the entities within the UCL Conception relate to varying strands of services research. Table 1 provides a comparison of core concepts of the UCL Conception with certain strands of services research across different disciplines.

Comment 16

Mapping two conceptions or frameworks to each other is a non-trivial matter. However, it is essential, if HCI researchers are to build on each other’s work, as Wild does here. It is, thus, worth considering some of the issues raised.

First, given two conceptions A and B, there are three possible mappings: (1) A to B; (2) B to A; and (3) C to A and B. (1) and (2) appear to be the same; but differ as to the conception assimilated (B in (1) and A in (2)). In (3), A and B are both assimilated to C and the latter is carried forward.

Second, mapping may be carried out by equivalence (in (1) and (2), some or all concepts in A may be the same as, or equivalent to, some or all concepts in B). Mapping may also be carried out by generification (in (1) and (2), some or all concepts in A may have some features of some or all concepts in B). Lastly, mapping may be carried out by abstraction (in (1) and (2), some or all concepts in A may be abstractions of some or all concepts in B).

Third, different conceptions may have been developed, consistent with different criteria, for example, consistency; coherence; and fitness-for-purpose. Normally, the criteria of the assimilating conception are the ones carried forward.

Wild claims that there are ‘overlaps between concepts within services research and the (Dowell and Long) conception’. This claim suggests the potential for a generification-type relation between the two sets of concepts. However, closer examination of Table 1 indicates an informal equivalence relationship, for example, (work as) tasks and services as tasks; but with exceptions, for example, people as co-creators, as well as worksystem components. The relationship can only be informal at best, because services research embodies several conceptions, for example, Service Blue Printing and Service Dominant Logic. The mapping is, thus, many-to-one, rather than one-to-one.

At first sight, given these overlaps between concepts within services research and the conception, the concepts could provide a framework to situate and relate the various disciplinary strands of service research. However, we argue that Dowell and Long’s work cannot be used as-is to represent and relate strands of services research and model service systems. The reasons are varied and include: services exist in a wider environment (Section 3.1.1); effectiveness judgements are dependent on values (Section 3.1.2); service demands a richer notion of people (Section 3.1.3); different kinds of abstract objects need to be represented and reasoned about (Section 3.1.4); the relationship between a core and service system needs to be represented (Section 3.1.5); and there is a need to go beyond engineering (Section 3.1.6). We ask the reader to bear with us whilst we provide an overview of the ABFS, which then allows us to address and expand on these points.

3. The activity based framework for services (ABFS)

Working from the view that services are consistently defined as activities, rather than objects or artefacts, the concepts (e.g. Roles, Domains, Actants, Artefacts, Goals, Tasks) of the ABFS are drawn from activity modelling approaches, such as task analysis (Diaper, 2004), domain and process modelling (Dowell and Long, 1989, 1998; Melão and Pidd, 2000), and soft systems methodology (Checkland and Poulter, 2006). This synthesis produced a framework that can relate together the disparate streams of service research (Wild et al., 2009a) and help classify the design foci (i.e. what is being designed) of service design approaches (Wild et al., 2009b).

Comment 17

Wild’s claim that the ABFS framework was synthesized from task analysis, domain and process modeling and soft systems methodology is not inconsistent with Comment 16, which suggests an informal equivalence relationship between the framework and the Dowell and Long Conception. Note also the design orientation of the framework (rather than (scientific) understanding; see also Comments 2 and 3).

- Tasks: the majority of service definitions class services as tasks, but they are perhaps most prominent in the Service Blueprinting approach (Shostack, 1984). There are classifications of service tasks, focussing on organisational division (Shostack, 1977); economic relationships (Hill, 1977, 1999); the relationship to a product (Tukker, 2004); or a more ‘general’ consideration of their nature (e.g. Lovelock, 1983)

- Goals: are not a first class entity in most approaches to services, but can be tacit in Service Blueprinting and in discussions of value (Flint, 2006; Vargo and Lusch, 2004a)

- People: are considered as co-creators of value in the Service-Dominant Logic (Vargo and Lusch, 2004a); are implied by lines of visibility in Service Blueprinting; and, within the IHIP qualities, are implied by Inseparability and by their role in service paradigms such as Nonownership (Lovelock and Gummesson, 2004)

- IT artefacts: the Service-Dominant Logic argues that software, along with tangible products and services, is a mechanism for delivering benefit to another party; this mirrors work in computing/information systems that promotes a view based on Software-as-a-Service (Bennett et al., 2000). Beyond such high-level and general statements, there is little work within the services community that examines the overall role of IT artefacts in service

- Domain: alongside tasks, domains have a close conceptual correspondence to the IHIP debates and refinements. In Section 2.1, we noted that there is a distinction between tangible and intangible products and tangible and intangible activities. We argue that there is a correspondence between intangible and tangible activities and products, and concrete and abstract IWS tasks and domain objects

- Structures and behaviours: are covered in the SDL as the resources (operand and operant) different parties bring to service exchanges. Often the terms are used loosely in other services research, and more importantly in a non-systemic manner

- Effectiveness: is time- and cost-oriented in Service Blueprinting, whilst work on service quality evaluation has often focussed on perceived or experienced Reliability, Assurance, Tangibles, Empathy, and Responsiveness (Seth et al., 2005), with some later work focussing on personal values (Lages and Fernandes, 2005). In Services Marketing, revenue and profitability have generally been the high-level effectiveness measures


Table 1: Comparing the concepts of the UCL Conception to services research strands.

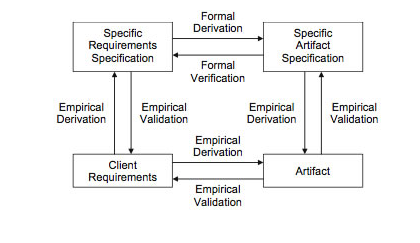

The ABFS is represented schematically in Fig. 1. Service activities are carried out within a service system. The system embraces the objects (both abstract and physical) and the goals and values held by various individual and collective actants. Activities are carried out by actants and artefacts (both IT and non-IT) to affect the objects in a domain. Not illustrated in the representation is the potential for an overlap between the domain and the actants and artefacts. This overlap captures potential recursive relationships between people and domains (e.g. self-directed education), and the distinction between coherence and correspondence domains (Vicente, 1990), that is, domains that ‘exist’ virtually within an IT artefact (e.g. 3D graphics, the internet).

A service system can be considered to have a variety of success measures, depending on the value (i.e. benefit) sought, and this is evaluated when the quality goals are balanced against the resource costs. Resource costs include affective and socio-cultural costs, as well as the consideration of the physical environment discussed by Stahel (1986). A service system has an environment, which has socio-cultural and physical dimensions. Borrowing from Dowell and Long (1998), we suggest that actants and artefacts have structures and behaviours. Long and Dowell’s concepts generalise, and we assume a wider set of structures and behaviours, namely the physical and socio-cultural (Stahel, 1986). Thus, we assume physical (eco-support system, toxicology system, flows-of-matter system) and socio-cultural (Elster, 2007; Hall, 1959) structures and behaviours alongside those of artefacts and individuals (i.e. the IWS). The costs of setting up and maintaining these structures and behaviours are evaluated in service success assessments. This in turn depends upon the set of values that can be identified as applicable to the service system.

Comment 18

Wild suggests that service systems have ‘physical socio-cultural environments’. Further, that there are physical and socio-cultural structures and behaviours, as for artefacts and individuals. The question then arises, as to how and by what ‘affective, socio-cultural costs’ are incurred. Figure 1 is not clear on this point.

A comparison of the ABFS concepts against specific streams of services research can be made: Table 2 (adapted from Wild et al., 2009a) presents our assessment of the depth of consideration of varying approaches against the core concepts of the ABFS.

Comment 19

As well as the ‘depth of consideration of varying approaches against the core concepts of the ABFS,’ it would be interesting to identify the actual concepts employed by these same varying approaches. Such a listing, in conjunction with the Dowell and Long Conception, would help to address some of the issues concerning mapping between conceptions raised by Comment 16.

Several additions and considerations mark a departure from Dowell and Long’s concepts, due to the need to engage with the services literature and with modelling service systems. Notable divergences and additions are: the inclusion of artefacts other than computers; the inclusion of a physical and socio-cultural environment; the inclusion of values; the reintroduction of affective and conative resource costs; an environment other than the domain; and the potential overlap between the domain and actants or tools.

The original purpose of the ABFS was to relate different strands of services research (see Wild et al., 2009a). However, recent work has started to examine the framework as a modelling approach. Of specific interest is modelling service systems for complex engineered products, and the transitions to different, possibly more ‘effective’, configurations. The aim is to produce high-level and integrative models that illustrate: the systemic nature of service systems; the distinction between different kinds of domain; and how different values affect the overall structure of a service system. An ABFS ‘model’ can be considered an elaborated representation of a Human Activity System (see Checkland and Poulter, 2006), and can act as a general system model.

3.1. ‘Barriers’ to moving the UCL Conception towards services

Having provided an outline of the ABFS, including details of the work we draw upon, we examine the barriers within the UCL Conception to its use, as-is, as a framework for relating wider strands of services research and providing models of service systems.

3.1.1. Service activities exist in a wider environment

The environment tends to become a catch-all in activity-based approaches, covering all the things that are not first class entities in their modelling worldview. Those with a systems orientation may try to draw a boundary around the core system of interest.

Comment 20

Dowell and Long (1989) indeed propose such a boundary: ‘The worksystem has a boundary enclosing all user and device behaviours, whose intention is to achieve the same goals in a given domain. Critically, it is only by defining the domain that the boundary of the worksystem can be established’. Elsewhere, Dowell and Long (1989) propose that ‘a domain of application may be conceptualized as “a class of affordance of a class of objects”’. Taken together, the two proposals can be considered sufficient for drawing ‘a boundary around the core system of interest’, as mooted by Wild.

However, boundaries are rarely easily defined (Mingers, 2006), existing across socio-cultural, physical and computational levels and often being observer dependent (Checkland and Poulter, 2006; Mingers, 2006). In the UCL Conception, the domain is the only ‘environment’ represented; thus, whilst the conception is ecological, it is only weakly so.

Comment 21

In Dowell and Long (1989 and 1998), the strong ecological relationship is between the worksystem and the domain. They write: ‘The worksystem clearly forms a dualism with the domain: it therefore makes no sense to consider one in isolation of the other.’ Wild is presumably referring to a different type of ecological relationship.

Outside of the domain or IWS, nothing is said about context. How the domain is distinguished from the general environment is related to goals, which refer to desired changes in part of the world, but the processes that demarcate the domain are not clear.

Comment 22

Dowell and Long (1989) are very clear about the demarcation of the domain from the general environment: ‘The domains of cognitive work are abstractions of the ‘real world’, which describe the goals, possibilities and constraints of the environment of the worksystem.’ For ‘real world’, we can read ‘general environment’ and for ‘environment’ we can read ‘domain’. The ‘real world’ or ‘general environment’ is not separately specified, other than by its expression in the domain. Wild is, thus, correct, that the Dowell and Long conception specifies only one environment – that of the domain.

Table 2: Comparison of the ABFS concepts against specific streams of services research

Dowell and Long (1998, p. 130) admit that a domain cannot be completely formalised, but it is not clear whether the domain’s boundary with the rest of the world is open or closed or something in between.

Comment 23

The formality with which a domain can be expressed depends on the ‘hardness’ of its design problem (Dowell and Long, 1989). They argue: ‘…..the dimension of problem hardness, characterising general design problems, and the dimension of specification completeness, characterising discipline practices, constitute a classification space for design disciplines….’

We argue that to embrace services research it is important to represent not just the immediate environment of interest to services activities (i.e. the domain), but the wider environment within which activities are carried out. Here we find that HCI approaches can benefit from environmental concepts within services research strands such as the functional economy (e.g. Stahel, 1986). Within our own work, a secondary environment was synthesised by drawing on Stahel’s (1986) work on the Functional Economy, along with other work (Elster, 2007; Hall, 1959). This secondary environment is assumed to be composed of a socio-cultural system and a physical system.

Comment 24

According to Dowell and Long (1989): ‘The worksystem has a boundary, enclosing all user and device behaviours, whose intention is to achieve the same goals in a given domain. Critically, it is only by defining the domain that the boundary of the worksystem can be established…..’ No ‘secondary environment’, in Wild’s sense is postulated, as such. However, some of its features, for example, physical and socio-cultural ones, could be expressed in terms of the worksystem and the domain.

The former, and the intellectual frameworks we drew upon, are discussed in the presentation of the ABFS. The latter breaks down into: the eco-support system for life on the planet (e.g. biodiversity); the toxicology system; and the flows-of-matter system, especially as they relate to recycling and remanufacturing decisions. Both elements provide potential sources of quality and resource cost measures (e.g. social coherence and pollution) for judging the effectiveness of service systems.

3.1.2. Effectiveness judgements are dependent on values

Effectiveness is taken to refer to whether activities are achieving some higher level or long term goal (see Checkland and Poulter, 2006, pp. 42–44), and we assume the term was originally chosen to distinguish it from efficiency (using resources well); and efficacy (that the activity is working).

Comment 25

According to Dowell and Long (1989): ‘Effectiveness derives from the relationship of an interactive worksystem with its domain of application – it assimilates both the quality of the work performed by the worksystem and the costs incurred by it. Quality and cost are the primary constituents of the concept of performance, through which effectiveness is expressed.’

Values are the criteria with which judgements are made about other entities (Checkland and Poulter, 2006). There has been a steady increase in the use of values and related terms such as value, quality, choice and worth (e.g. Cockton, 2006; Karat and Karat, 2004; Light et al., 2005). Cockton (unpublished) notes that HCI’s original quest to be a scientifically and engineering oriented discipline led it to background values, and not examine its own values as a discipline.

In our context, our interest is a little more mundane: the impact that different value sets and value choices have on the configuration and judgement of service systems. Values affect which service system configurations actants view as effective, acceptable or unacceptable (e.g. labour and time savings vs. social cohesion and fight reduction). For example, placing high value on one’s carbon footprint can lead to transport choices such as cycling and walking, which may be in a trade-off situation with time use goals and values. Alternatively, certain values and their trade-offs may lead to alternative behaviours such as carbon offsetting, using renewable fuel and lift/car share schemes.

Values are a key aspect of scoping the other elements of a service system. The form of the service system can reflect the values held by its actants. Whilst a high-level goal of restaurants is to provide food and generate profit, the values held by the owners, staff and patrons can drive radically different manifestations of eating location, menu, and experience. Comparing a high-class joint with a roadside catering outlet without reference to values would be meaningless. Yet their basic transformations and domain objects remain remarkably similar: the preparation and serving of foodstuffs.

Comment 26

The similarity resides only in the high level of description. Major differences would appear, following Dowell and Long (1989), at lower levels of the description of the worksystem and the domain.

Some individuals and groups see their values as true and objective, and class those who do not share their values as having none (Beck and Cowan, 1996; Goodwin and Darley, 2008). Witness the view by many in the ‘environmental’ movement that short-term, non-resource-renewing, profit-driven enterprises have no values. Such enterprises have values, but they are focussed on assessing effectiveness by profitability and capital liquidity rather than sustainability. It becomes a misnomer to claim to be placing human values at the core of the HCI discipline (see Harper et al., 2008), as all our values are human. A human valuing technological progress and subscribing to technological determinism, whilst potentially passé, is still holding and enacting human values; to claim otherwise would be to claim a truly objective and non-human position on values. The issue is whether those values are reflected in the design process, whether their maintenance or desired change is in some way supported by the entities (artefacts, processes, social structures) we design, and whether and how design methods and entities promote the reconciliation of differing value systems.

Comment 27

This point is hard to dispute and indeed, remains a challenge for HCI. The dualism of the worksystem and the domain, following Dowell and Long (1989), is able to support the expression of such values for design purposes.

With respect to services, one key trend in recent years has been the emergence of availability and capability contracts (Terry et al., 2007; Tukker, 2004). These go beyond outsourcing to contractual arrangements where two or more partners work together to deliver services. In many contexts, this brings commercial and non-commercial organisations together with a potential for clashes of values, the most obvious being when public sector services interact with commercially oriented organisations. Furthermore, these arrangements rely on the service recipient providing facilities back to its supplier, with both parties acting as supplier and recipient of services.

In other contexts, service design is tackling the design of public services, another form and context that can bring together different actants and values, from the efficiency-driven targets beloved by bureaucrats and politicians to those concerned with retaining or promoting broad and difficult goals such as community cohesion and a sense of community participation (Parker and Heapy, 2006; Seddon, 2008). If HCI’s and service science’s frameworks cannot acknowledge the existence of values in relation to the effectiveness of activities and artefacts, they will be impoverished. Promoting values to a first class entity within HCI and services frameworks opens them up to a richer set of considerations about human action. Recognising that values exist in relation to goals, activities, and desired and actual transformations adds more depth to the characterisation of values. They are not discussed context-free, with the ‘it all depends’ caveat; they are scoped in reference to actions within a domain and an environment.

Comment 28

This is a good point and a hard one to dispute. For the difference between domain and (secondary) environment – see Comment 24.

3.1.3. Service demands a richer notion of people than the UCL Conception provides

Dowell and Long (1989) originally classed resource costs as being cognitive, conative (motivational), and affective (emotional).

Comment 29

To be precise, ‘conative costs’, according to Dowell and Long (1989), ‘relate to the repeated mental and physical actions and effort required’ by interactive behaviours, performed to achieve some goal. It is in this sense that they are ‘motivational’.

The later paper (Dowell and Long, 1998) dropped affective and conative resource costs. In our work, our ‘enrichment’ of the conception of people has been twofold. The first is the recognition that values play an important role in shaping goals and effectiveness criteria and in scoping wanted and unwanted resource costs of a service system (see Section 3.1.2). The second is that the notion of resource costs and quality measures can embrace conative, affective, and socio-cultural issues.

Comment 30

The ‘enrichment’ of the Dowell and Long (1989) conception of people (‘users’), claimed by Wild, may be one of application; but not of substance. ‘Values’ and ‘socio-cultural issues’ can both be represented in terms of domain transformations, enacted by the worksystem behaviours, if the representation is part of the design requirement – see also Comment 24.

The general argument for structures and behaviours can be expanded to cover emotional and socio-cultural issues. Work by luminaries such as Teasdale and Barnard (1993), Elster (2007), Hall (1959), Beck and Cowan (1996), and Cowan and Todorovic (2000) suggests phenomena akin to structures and behaviours. However, the nature of these additional structures and behaviours both differs from, and interrelates with, cognitive ones. They can probably be assumed to exist at different emergent levels of reality (Mingers, 2006) and to vary in their objective, intersubjective and subjective qualities (Heylighen, 1997; Mingers, 2006). For most purposes, activities can be seen to be simultaneously affective, cognitive and in some way socio-cultural. Whilst this makes the engineering vision for HCI prescribed by Long (Long and Dowell, 1989) harder to implement, it should lead to a broader understanding of how different kinds of structures and behaviours are involved in the effectiveness of activities; for example, how a service deemed to achieve its goals efficiently could fail experientially or socio-culturally, or incur physical environmental costs that cannot be sustained, and vice versa.

3.1.4. Different kinds of domain objects need to be represented and reasoned about

The domain concept provides a useful abstraction for the modelling of service systems. ‘What are the objects?’ and ‘What is their nature?’ are simple questions that force the analyst to grapple with what the service activities will be ‘doing’, allowing us to consider similarities and differences between service contexts. Dowell and Long (1989) stressed that objects be described at an appropriate level, but give no indication of how to do this.

Comment 31

Dowell and Long (1989 and 1998) illustrate how domain object/attribute/states can be expressed. Worked examples of domain modeling can be found in Hill (2010); Stork (1999); and Cummaford (2007).

A legitimate goal of an activity could be to affect emotional or socio-cultural ‘objects’; for example, entertainment, education and health services all alter personal or socio-cultural entities (Hill, 1977). In theory, the domain concept can generalise to cover alternative kinds of objects. Hence, there needs to be the recognition that ‘objects’ in the domain could have properties that are not just physical (e.g. material or energy) or informational (i.e. information or knowledge). There is ambiguity about the status of social and affective issues in Long’s work. Green (1998) noted that Dowell and Long’s work in air traffic control covers social issues, but they are not considered as first class concepts within the UCL Conception. Nor are they explicitly discussed in relation to the determinism boundary (Dowell and Long, 1989). In turn, affective and conative resource costs were covered in the first paper (Dowell and Long, 1989), but later dropped (see Dowell and Long, 1998).

Comment 32

Affective and conative costs, as part of an expression of worksystem performance, remain as part of the Dowell and Long conception (1989). They were not referenced in the 1998 paper, whose specific expression was oriented towards ‘cognitive engineering’, rather than HCI.

There is a requirement to be able to model, and reason about, emergent properties within a domain that are motivational, emotional, and socio-cultural.

Comment 33

Domain objects can be decomposed into cognitive, conative, and affective attribute states, as required by the work, performed by the worksystem. Socio-cultural attribute states might constitute higher levels of description of these states or indeed additional objects, as required by the product goals of the worksystem. User costs can also be decomposed. See also Long (2010) for the example of a computer games ‘fun’ interactive (work) system.

Hence, we suggest that activities can aim to alter emotional and socio-cultural states along with informational and physical objects. Despite views to the contrary, advice on how this could be achieved cannot be found in work on analysis patterns or domain modelling (e.g. Fowler, 1997; Sutcliffe, 2002), which shares similar modelling concepts but gives little consideration to the different ‘kinds’ of domain objects altered and how they should therefore be modelled.⁵ The development of a computer-based representation of a domain is the creation of a representing world; however, these representing worlds are not grounded in a perspective that can handle different ‘kinds’ of object with any theoretical depth. They provide no theory to allow us to distinguish between situations where, for example, fun is an evaluative criterion alongside others (e.g. work applications), where it is the goal of the activity (games and theme parks), or where it is balanced against other factors such as knowledge gained (e.g. modern interactive museums).

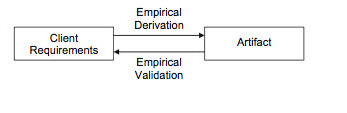

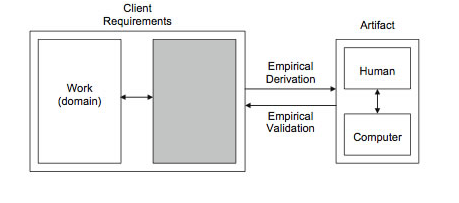

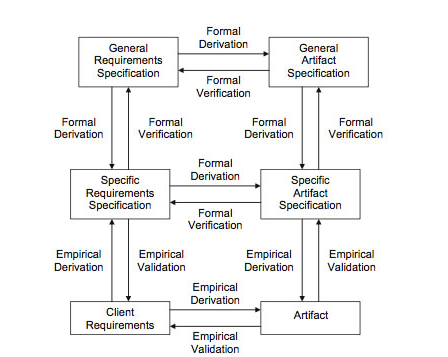

3.1.5. Core system, service system

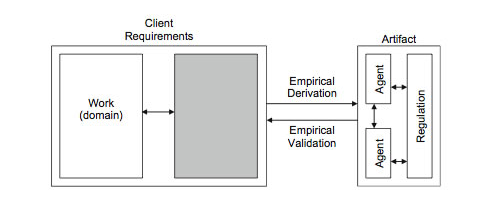

When modelling service domains, we need to represent the overlap between at least two different Human Activity Systems (HAS). Service systems are set up to support another human activity system; hence we posit a relationship between a core system and one or more service systems, and Fig. 2 illustrates the distinction. The darker boxes represent part of the core human activity system, and the lighter boxes represent the service system. The actants, artefacts, and sometimes aspects of the domain of the core system become the domain of the support system. The support system will utilise its own tools and artefacts in support of the core system. It may, however, share artefacts, actants, and activities with the core system. Fleet planning and forecasting of assets are examples of such shared activities.

In reality, the resulting model may be more complex than the structure represented in Fig. 2. Many contemporary service contracts are complex, both in terms of the core domain (e.g. healthcare, armed forces) and in terms of the contractual arrangements, which involve multiple partners, long time scales, and complex payment and reward mechanisms (Terry et al., 2007). There may also be a chain of service systems in a series of core/support relationships; for example, the service support may draw on other service systems such as IT or payroll.

Comment 34

The reader should be reminded at this point that the Dowell and Long (1989 and 1998) conception is intended to specify design problems and to support the search for design solutions. It is not, then, simply a general, all-purpose representational conception. The relationship between core and support systems needs to be specified and tested against design requirements and the possibilities of their satisfaction.

Fig. 2. Overlapping systems (core and service).

The distinction between core and support system could be modelled by including a rich set of ‘parallel’ enabling tasks (Whitefield et al., 1993), and expanding the range of (enabling) tools and domain objects. We argue that, for most significant modelling exercises, the use of the enabling task concept masks the importance of distinguishing the relationship between different systems that undertake different roles. The notion of core and service domains allows the representation of issues such as clashes in values and visibility, in reference to different kinds of activity (e.g. physical maintenance, forecasting, and education), artefacts, and domain changes. However, since modelling approaches are often used by different modellers in different ways (Melão and Pidd, 2000), the enabling tasks approach may also be suited to illustrating differences between self-services and services provided by another party.

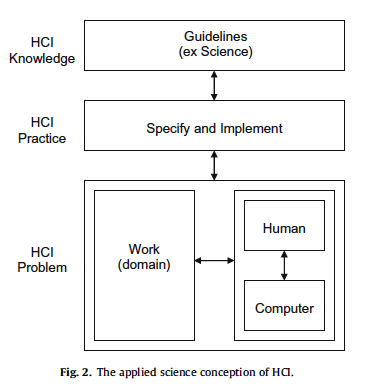

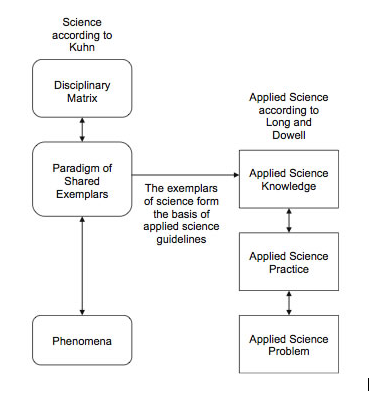

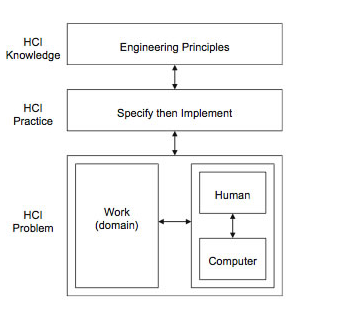

3.1.6. Going beyond engineering

Whilst the HCI 89 keynote (Long and Dowell, 1989) offers alternative perspectives, such as HCI as craft and as applied science, and acknowledges that HCI will provide knowledge embodied as principles, heuristics, and guidelines, Long’s work is probably most heavily associated with the promotion of an engineering perspective on the development of IT artefacts (e.g. Dowell and Long, 1989, 1998; Lim and Long, 1994). It is also associated with handling human factors from an engineering perspective, through practices such as ‘specify and implement’ and the development of engineering principles (Long and Dowell, 1989). It remains an open question whether ‘engineering’ principles can be widely developed. There are few in existence (see Cummaford, 2000; Johnson et al., 2000), and their development takes considerable resources.

Comment 35

Limitations on the development of design principles can be found in Dowell and Long (1989), Figure 2 (‘A Classification Space for “Design Disciplines”’), in particular with respect to an Engineering Discipline of Human Factors.

As HCI has expanded its focus to embrace social, hedonic, and experiential concerns (Hassenzahl et al., 2000; McCarthy and Wright, 2004), and to support community and family activities (Harper et al., 2008), this engineering perspective has begun to look problematic in some respects (e.g. Hassenzahl et al., 2001; Sengers, 2003). There is much to be said for an engineering perspective, and none of what follows should be taken as a dismissal of the power of engineering approaches. However, we need to be aware of situations where the overall success of service design and delivery depends on the interplay of factors that are quantifiable and repeatable, as well as personal, experiential and socio-cultural factors that may be more tacit, subjective and variable.

Comment 36

If social, hedonic and experiential concerns can be specified, either as part of the domain or of the worksystem, or both, the Dowell and Long conception (1989 and 1998) can express any associated design problem (within the limits set out in Figure 2 of Dowell and Long, 1989) and support the search for a design solution; see Long (2010) for the example of a computer games ‘fun’ interactive (work) system. The reverse holds if the social, hedonic and experiential concerns cannot be specified. The latter is assumed to be the case for ‘tacit, subjective and variable’ concerns.

A service or IT artefact that makes the required transformations effectively and efficiently can still be considered a failure if the customer, whether individual or organisational, has been subject to issues such as: over- or under-inclusion in decision making; reduced cohesiveness of social structures; reduced visibility; or being left feeling belittled, redundant, or deskilled by the service professionals. In addition, the failure to address such softer issues can lead to a breakdown in the ability to obtain data about traditional quality measures and processes: data may not be recorded and passed on, equipment may be mishandled, and shared assets may be withheld. Conversely, a service recipient can be made to feel welcomed, at ease, and involved, but without technical competence in executing the service, the service could still fail. Examples include patients who are well treated but misdiagnosed, or service recipients who are involved in decision-making processes that fail to fix faults in equipment.

Comment 37

If the ‘softer issues’ cited cannot be specified in terms of the Dowell and Long conception (1989), the associated design problem can only be addressed by experiential (craft) design knowledge, using ‘implement and test’ design practices (see Figure 2).

When we move towards services, we find that many are carried out in distressing and ultimately unwanted circumstances. A designer or researcher coming in and declaring either an old school (HCI as engineering of efficiency, errors and measurement of satisfaction) or new school (HCI as promotion of hedonic measures)6 approach should receive short shrift. Assessments of the effectiveness of such services need to balance this factor against other measures of effectiveness. Questions such as these cannot be handled within a single perspective dominated by engineering and work concerns (i.e. Dowell and Long, 1989, 1998; Long and Dowell, 1989),

Comment 38

Hedonic issues, which can be specified, can be conceptualized following Dowell and Long (1989). See also Comment 36.

but equally someone viewing interaction as maximising hedonic issues would also fail. Preventing the decline of an emotional state, which could be a laudable goal of such service types (e.g. counselling services), is a subtly different proposition from promoting a hedonic experience. However, we maintain that much of the spirit of the concepts outlined in Dowell and Long’s work generalises, particularly the explicit notion of structures and behaviours and the implicit notion of trade-offs in the design of systems.

Comment 39

The trade-off in design between ‘Task Quality’ and ‘User (Resource) Costs’ is recognized throughout Dowell and Long (1989 and 1998).

3.2. Illustrative examples

Within this section, we provide two illustrative examples. The first is a simple illustration taken from a novel by Gemmel; the second presents an initial ABFS for a family’s transport choices, given the particular value set they wish to enact.

3.2.1. A literary example

An implicit issue that can be drawn out of the UCL Conception’s characterisation of task qualities and resource costs is that trade-offs can be made between different system configurations. The inclusion of a wider set of costs allows the representation of wider trade-offs, both as design options within a single IWS-domain coupling and in relation to different classes of application domains. In some situations, tasks are harder to learn and goals harder to achieve, but provide greater enjoyment (e.g. games); in others, the goal is achieved more efficiently, but with a different ‘affective profile’. An IT artefact could be made more efficient, but at great cost in terms of the processing power and memory it needs; where that cost is unacceptable, additional tasks are instead allocated to the user in the IWS.

A literary example of these trade-offs can be provided by Gemmel: ‘A wagon and a single driver would be more effective, surely?’ observed Skilgannon … Landis smiled … ‘In the main however they just bring food. You speak of effectiveness. Yes, a wagon would bring more supplies, more swiftly, with considerable economy of effort. It would not, though, encourage a sense of community, of mutual caring.’ (Gemmel, 2004, p. 52). In this example, meals are delivered to the loggers in the story by the women of the local town, some married to the loggers, some not. Leaving aside the somewhat old-fashioned gender roles, this arrangement, whilst less efficient than one man and a wagon, is continued because it promotes social cohesion: the presence of the women means that fewer fights occur between loggers, and new relationships are formed. Thus, the domain can be seen to embrace both the physical changes of food preparation and affective and social states. Within the book, this catering arrangement leads to a key relationship between two characters, one that shapes the drive and motivation of one of those characters throughout the remainder of the story. Decisions and trade-offs of this kind surround and permeate many activities, both social and work based (see Wakkary, 2005; Wild et al., 2003). With a wider set of quality assessment criteria and resources to consider, we can investigate the trade-offs that can be made when setting up a service system, rather than being limited to a narrower conception of IT effectiveness that considers only physical and cognitive changes and costs.

Comment 40

Dowell and Long (1989 and 1998) set no limits on the ‘set of assessment criteria, objectives and resources to consider’. The limits are set by the ‘design problem’ which their conception is used to express, that is, the difference between actual and desired performance. In the case cited by Wild, if the woman-wagons interactive worksystem performed either ‘food delivery work’ (domain object: food; attribute: delivery; states: delivered/undelivered) or ‘social cohesion work’ (domain object: social cohesion; attribute: promotion; states: promoted/unpromoted), or both types of work, with either a lower Task Quality or higher Resource (cognitive, conative or affective) Costs, or both, than desired, then a design problem would exist. The Dowell and Long conception would be appropriate for expressing such a design problem, as illustrated (see also Hill’s paper (2010) on the issue). No limits on domain objects are set, as such. It suffices that they can be specified as physical and abstract object/attribute/states, whose transformation constitutes the work of some worksystem at some level of Task Quality and User Costs. See also Comment 36.
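The condition stated in Comment 40 can be sketched in code. The following is a minimal Python sketch, not part of either conception: the names `DomainAttribute`, `Performance` and `is_design_problem` are hypothetical, and it assumes that Task Quality and each kind of resource cost can be given numeric values, which the conception itself does not prescribe. It encodes the two kinds of work in the loggers example as object/attribute/state descriptions and flags a design problem when actual performance has lower Task Quality than desired, higher costs than desired, or both.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass(frozen=True)
class DomainAttribute:
    """A domain object attribute and the states it can take (hypothetical encoding)."""
    obj: str                  # e.g. "food" or "social cohesion"
    attribute: str            # e.g. "delivery" or "promotion"
    states: Tuple[str, ...]   # e.g. ("undelivered", "delivered")


@dataclass
class Performance:
    """Worksystem performance for some work (assumed numeric scales)."""
    work: Tuple[DomainAttribute, ...]   # attribute transformations performed
    task_quality: float                 # assumption: higher is better
    costs: Dict[str, float] = field(default_factory=dict)  # e.g. {"cognitive": 3.0}


def is_design_problem(actual: Performance, desired: Performance) -> bool:
    """Return True if Task Quality is lower than desired, or any resource
    cost exceeds its desired limit, or both. Cost kinds that have no
    desired limit are not checked."""
    quality_shortfall = actual.task_quality < desired.task_quality
    cost_excess = any(
        actual.costs.get(kind, 0.0) > limit
        for kind, limit in desired.costs.items()
    )
    return quality_shortfall or cost_excess


if __name__ == "__main__":
    food = DomainAttribute("food", "delivery", ("undelivered", "delivered"))
    cohesion = DomainAttribute("social cohesion", "promotion", ("unpromoted", "promoted"))
    desired = Performance(work=(food, cohesion), task_quality=0.8,
                          costs={"cognitive": 5.0, "conative": 5.0, "affective": 5.0})
    actual = Performance(work=(food, cohesion), task_quality=0.9,
                         costs={"cognitive": 4.0, "conative": 6.0, "affective": 2.0})
    print(is_design_problem(actual, desired))  # True: conative cost exceeds its desired limit
```

Comparing each kind of cost separately, rather than summing them, is a choice made for this sketch; it keeps cognitive, conative and affective costs distinct, in line with the decomposition of user costs mentioned in Comment 33.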

3.2.2. Family travel services

Our next example uses the following scenario to drive ABFS models of services: